Attacking LLMs

Learn to identify and exploit LLM vulnerabilities, covering prompt injection, insecure output handling, and model poisoning.

In this module, we cover practical attacks against systems that use large language models, including prompt injection, unsafe output handling, and model poisoning . You will learn how crafted inputs and careless handling of model output can expose secrets or trigger unauthorised actions, and how poisoned training data can cause persistent failures. Each topic includes hands-on exercises and realistic scenarios that show how small issues can be linked into larger attack paths. By the end, participants can build concise proof of concept attacks and suggest clear, practical mitigations.

0%

Input Manipulation & Prompt Injection

Understand the basics of LLM Prompt Injection attacks.

0%

LLM Output Handling and Privacy Risks

Learn how LLMs handle their output and the privacy risks behind it.

0%

Data Integrity & Model Poisoning

Understand how supply chain and model poisoning attacks can corrupt the underlying LLM.

0%

Juicy

A friendly golden retriever who answers your questions.

0%

BankGPT

A customer service assistant used by a banking system.

0%

HealthGPT

A safety-compliant AI assistant that has strict rules against revealing sensitive internal data.

Need to know

Advanced Server-Side Attacks

Master the skills of advanced server-side attacks, covering SSRF, File Inclusions, Deserialization, Race Conditions, and Prototype Pollution.

Advanced Client-Side Attacks

Through real-world scenarios, you will gain a detailed understanding of client-side attacks, including XSS, CSRF, DOM-based vectors, SOP, and CORS vulnerabilities.

Web Hacking Fundamentals

Understand the core security issues with web applications, and learn how to exploit them using industry tools and techniques.

Next steps

Custom Tooling

Build and automate custom tools using Python, Burp Suite, and browser automation to exploit and test web applications efficiently.

Bypassing WAF

Master techniques to understand, exploit, and bypass WAFs, covering signature and pattern bypasses, parsing and normalisation evasion, protocol manipulation, and exploiting weak/outdated configurations.

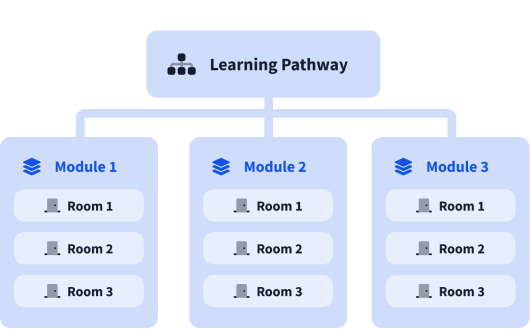

What are modules?

A learning pathway is made up of modules, and a module is made of bite-sized rooms (think of a room like a mini security lab).