
Get started with cyber security in 25 days by learning the basics and completing a new, beginner-friendly security exercise every day in the lead-up to Christmas: an advent calendar, but with security challenges instead of chocolate.

Also, every day you complete a challenge, you are entered into another prize draw for the chance to win a mini-prize. The "daily prizes" are drawn at the end of the week. Complete every task in the event and earn a certificate of completion! Make sure your name is set in your settings.


PortSwigger are the creators of Burp Suite, the world's leading web application security testing toolkit. Their center of excellence is the PortSwigger Research team, where they discover and exploit vulnerabilities, then feed their findings back into Burp Suite products.

Videos Featuring

Each task released has a supporting video walkthrough, from a range of amazing and highly talented creators, including:

CyberSecMeg, John Hammond, Tib3rius, Neal Bridges, HuskyHacks, InsiderPhD, and NahamSec!

Topics Include

Topics that will be covered in the event are



The Advent of Cyber event is completely free! However, check out some of the reasons to subscribe:

If you want to gift a TryHackMe VIP subscription, you can purchase vouchers.

In celebration of Advent of Cyber, you can get 20% off the annual subscription using the discount code AOC2021 at checkout. This discount is only valid until the 7th of December.

Advent of Cyber is a perfect event for introducing and upskilling many different teams within your company in cyber, through this fun 25-day event!

With TryHackMe's management dashboard for business, teams in your company can tackle the daily challenges together, where you can monitor their progress and give them a premium learning experience.

Christmas Swag

Want to rep swag from your favorite cyber security training platform?

We have a special edition Christmas Advent of Cyber t-shirt. Buy from our swag store.

To access the target machines you deploy on TryHackMe, you will need to either use an OpenVPN client or deploy your own web-based AttackBox (you can do this by clicking the blue "Start AttackBox" button at the top of this page). We highly recommend you complete the Tutorial room to learn more about getting connected.

Using the web-based AttackBox, you can complete all of these exercises through your browser. If you're a regular user, you can deploy the AttackBox for free for 1 hour a day; if you're subscribed, you can deploy it for an unlimited amount of time!

Rules

Breaking any of the following rules will result in elimination from the competition:

- *.tryhackme.com and the OpenVPN server are off-limits to probing, scanning, or exploiting.

- Users are only authorized to hack machines that have been deployed in the rooms they have access to.

- Users are not to target or attack other users.

- Users should only enter the event once, using one account.

- Answers to questions are not to be shared unless shown on videos/streams.

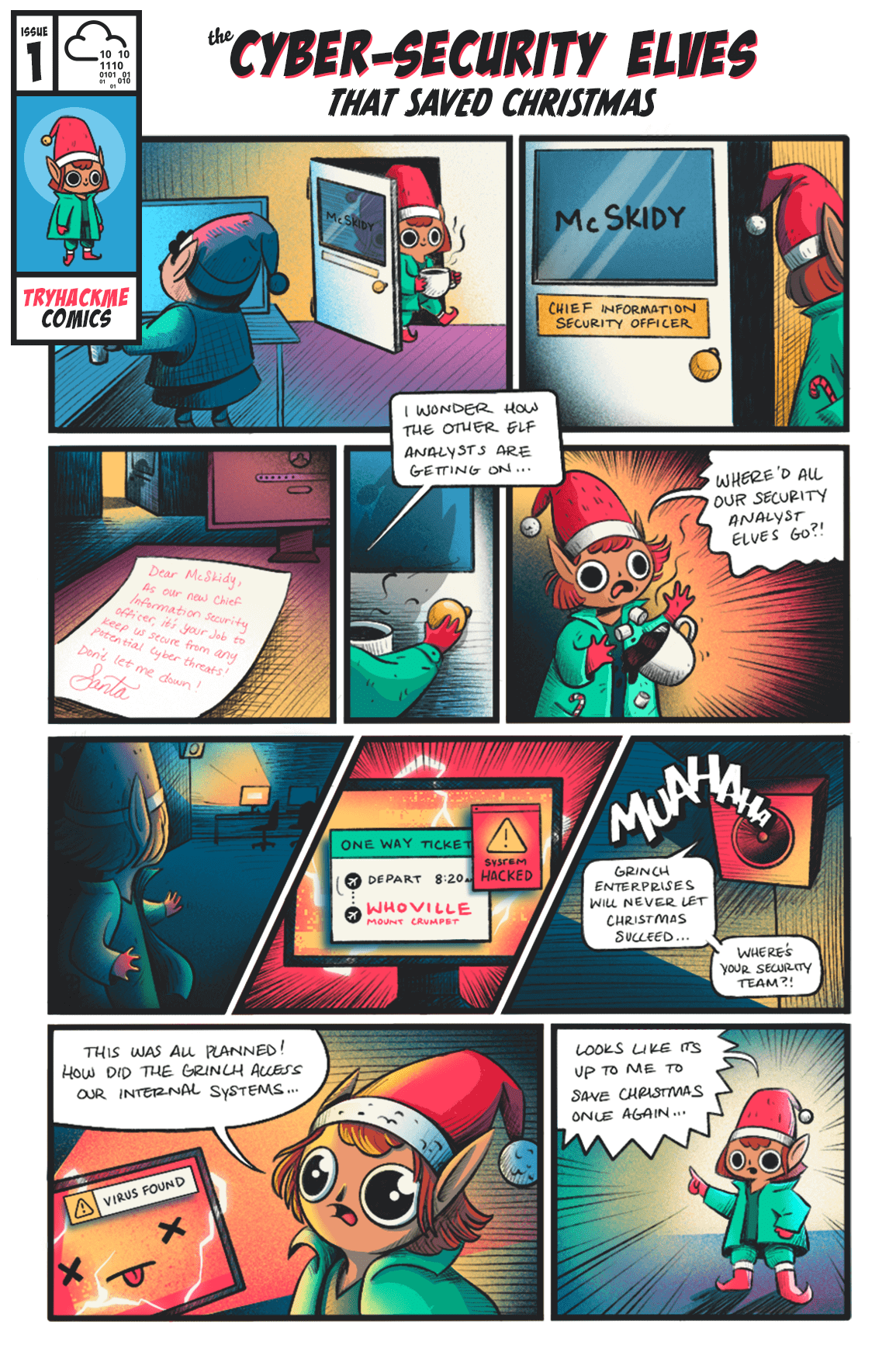

[The Christmas Story]

All exercises in Advent of Cyber follow a fun Christmas story. This year, the elf McSkidy needs your help to hack back and undo the grinch's malicious activities.

It's the eve of 30th November - McSkidy sits in her large office with a cup of hot cocoa, reminiscing over her stressful times at the Best Festival Company. Since her management of the Christmas Monster's cyber attacks last December, she'd been promoted to Chief Information Security Officer (CISO) and has managed to build a world-class security team. She made a promise to never let Christmas get affected by cyber incidents and has done everything in her power to prepare the Best Festival Company for any incidents, and assist Santa in delivering presents globally with no disruptions!

As she grins to herself, "After all we've done, what could go wrong?", Elf McAssistant runs into her office and gasps, "All our security analysts have missed their last shift and no security personnel can be found in the building." McSkidy jumps out of her chair and spills her hot cocoa all over herself. "WHAT?"

She swiftly moves over to the Elf Security Center housing the security personnel and looks over the large area filled with empty desks. Where did everyone go on the eve of the most important time for the Best Festival Company? She rushes over to the desk of the head of her security analyst team, Elf McLeader, and notices the desk is surprisingly clean. For someone so messy, how is his work area completely empty? As she started theorising in her head, she noticed a small piece of paper hidden at the back of the desk behind the screen. As she made sense of what was on the paper, her eyes widened - why did McProfessional book a one-way flight ticket away on this exact day?

Before she had time to make any assumptions, a loud, grumpy voice resonated across the security center from the internal announcement systems: "Grinch Enterprises will never let Christmas succeed. It would be a shame if your world-class security team just suddenly disappeared."

"THIS WAS ALL PLANNED - HOW DID THEY ACCESS OUR INTERNAL SYSTEMS" cried McSkidy. Their intelligence team had prepared for this exact scenario but it didn't help that the security center was completely empty!

"This needs to stop happening" sighed McSkidy and dragged herself to the office to save Christmas

Please note, tasks are released daily and will vary in difficulty (although they will always be aimed at a beginner level).

The Christmas story is used within some of the tasks, so make sure you read the above.

Story

The inventory management systems used to create the gifts have been tampered with to frustrate the elves. It's a night shift, and McStocker comes to McSkidy panicking about the gifts all being built wrong. With no managers around to fix the issue, McSkidy needs to somehow get access and fix the system and keep everything on track to be ready for Christmas!

Learning Objectives

- What is an IDOR vulnerability?

- How do I find and exploit IDOR vulnerabilities?

- Challenge Walkthrough.

What is an IDOR vulnerability?

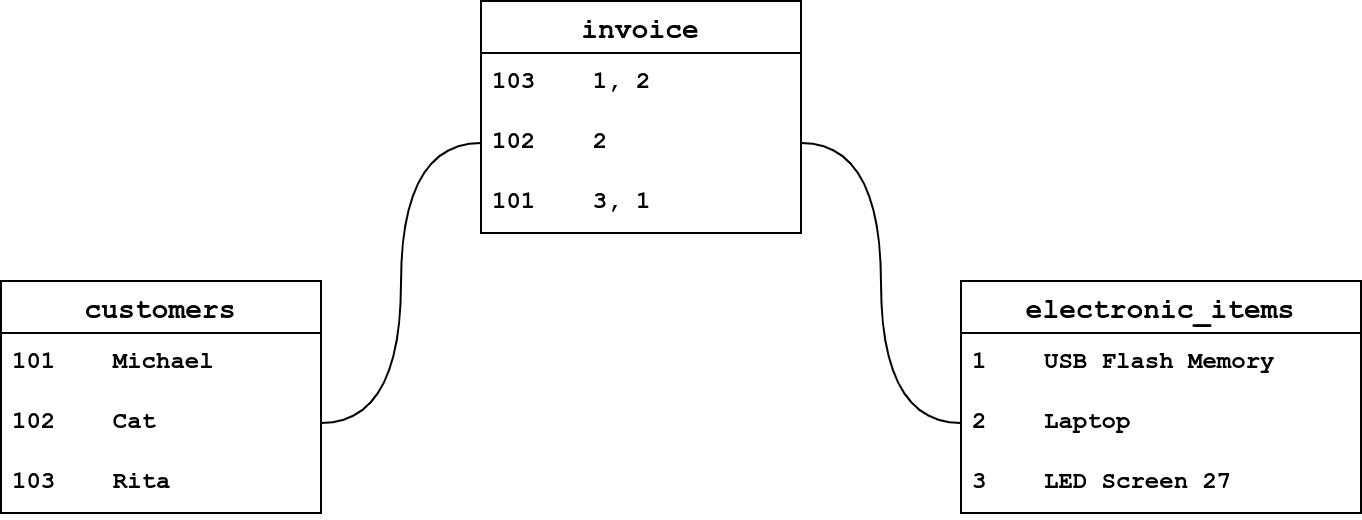

IDOR stands for Insecure Direct Object Reference and is a type of access control vulnerability. An access control vulnerability is when an attacker can gain access to information or actions not intended for them. An IDOR vulnerability can occur when a web server uses user-supplied input to retrieve objects (files, data, documents) but places too much trust in that input: the web application does not validate whether the user should, in fact, have access to the requested object.

How do I find and exploit IDOR vulnerabilities?

As previously mentioned, an IDOR vulnerability relies on changing user-supplied data. This user-supplied data can mainly be found in the following three places:

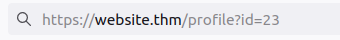

Query Component:

Query component data is passed in the URL when making a request to a website. Take, for instance, the following screenshot of a URL.

We can break down this URL into the following:

Protocol: https://

Domain: website.thm

Page: /profile

Query Component: id=23

Here we can see the /profile page is being requested, and the parameter id with the value of 23 is being passed in the query component. This page could potentially be showing us personal user information, and by changing the id parameter to another value, we could view other users' data.
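As a minimal sketch of the idea above, the following Python snippet splits the example URL into its parts and builds variants with a different id value. The helper name with_id and the range of ids tried are illustrative assumptions, not part of the original material, and no request is actually sent.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qs, urlencode


def with_id(url: str, new_id: int) -> str:
    """Return the same URL with its `id` query parameter replaced."""
    parts = urlsplit(url)
    query = parse_qs(parts.query)          # {"id": ["23"]}
    query["id"] = [str(new_id)]
    return urlunsplit(parts._replace(query=urlencode(query, doseq=True)))


original = "https://website.thm/profile?id=23"

# Break the URL down the same way as the list above.
parts = urlsplit(original)
print("Protocol:", parts.scheme)
print("Domain:  ", parts.netloc)
print("Page:    ", parts.path)
print("Query:   ", parts.query)

# Hypothetical candidates an attacker might request next (ids 20 to 25).
candidates = [with_id(original, n) for n in range(20, 26)]
for url in candidates:
    print(url)
```

If the application returns another user's profile for one of these URLs without checking who is asking, it is vulnerable to IDOR.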

Post Variables:

Examining the contents of forms on a website can sometimes reveal fields that could be vulnerable to IDOR exploitation. Take, for example, the following HTML code for a form that updates a user's password.

<form method="POST" action="/update-password">

<input type="hidden" name="user_id" value="123">

<div>New Password:</div>

<div><input type="password" name="new_password"></div>

<div><input type="submit" value="Change Password"></div>

</form>

You can see from the hidden input field on the second line of the form that the user's id is being passed to the webserver. Changing the value of this field from 123 to another user_id may result in changing the password for another user's account.
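To illustrate why a hidden field offers no protection, here is a small sketch that builds the same POST request the form would submit, but with a different user_id. The base URL https://website.thm, the id 124 and the password value are assumptions chosen for illustration; the request object is only constructed, never sent.

```python
from urllib.parse import urlencode
from urllib.request import Request


def build_password_update(base_url: str, user_id: int, new_password: str) -> Request:
    """Build (but do not send) the POST request the form would submit."""
    # The browser would send these two fields as a form-encoded body.
    body = urlencode({"user_id": user_id, "new_password": new_password}).encode()
    return Request(base_url + "/update-password", data=body, method="POST")


# Hypothetical values: the form shipped with user_id=123, we swap in 124.
req = build_password_update("https://website.thm", 124, "hunter2")
print(req.get_method(), req.full_url)
print(req.data.decode())
```

Nothing in the request distinguishes the tampered value from the original one, so the server has to verify that the logged-in user is allowed to change that account's password.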

Cookies:

To stay logged into a website such as this one, cookies are used to remember your session. Usually, this will involve sending a session id, which is a long, random, hard-to-guess string such as 5db28452c4161cf88c6f33e57b62a357, which the webserver securely uses to retrieve your user information and validate your session. Sometimes though, less experienced developers may store user information in the cookie itself, such as the user's ID. Changing the value of this cookie could result in displaying another user's information. See below for an example of how this might look.

GET /user-information HTTP/1.1

Host: website.thm

Cookie: user_id=9

User-Agent: Mozilla/5.0 (Ubuntu;Linux) Firefox/94.0

Hello Jon!

GET /user-information HTTP/1.1

Host: website.thm

Cookie: user_id=5

User-Agent: Mozilla/5.0 (Ubuntu;Linux) Firefox/94.0

Hello Martin!

IDOR in the wild

Any product, user, or service identifier that appears in a URL or elsewhere in a request is worth testing. IDOR vulnerabilities can reveal sensitive information and potentially give you access to usually restricted site functionality. For security researchers, IDOR vulnerabilities can be impactful, and reporting them can yield a good bug bounty; see this article, where an IDOR vulnerability report to PayPal had a $10,500 payout.

Challenge Walkthrough

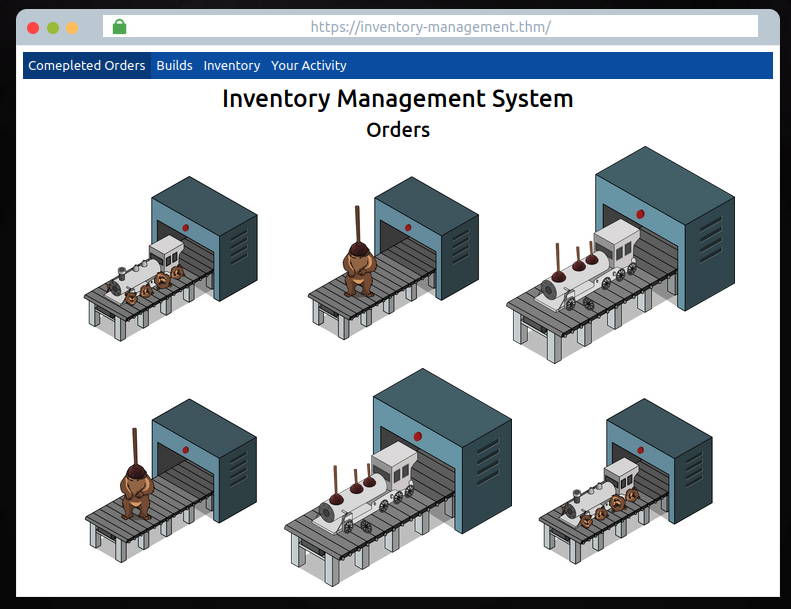

Click the green "View Site" button at the top right of this task to open up the inventory management system.

Here you'll find a mock browser on the completed orders page showing images of the toys which have been made incorrectly due to the Grinch's tampering!

There are also three other pages on the navigation panel; Builds, Inventory and Your Activity.

- The Builds page shows different toys and the parts they are made up of (as you can see, due to tampering, these are all incorrect).

- The Inventory page lists the individual items with their corresponding SKU codes.

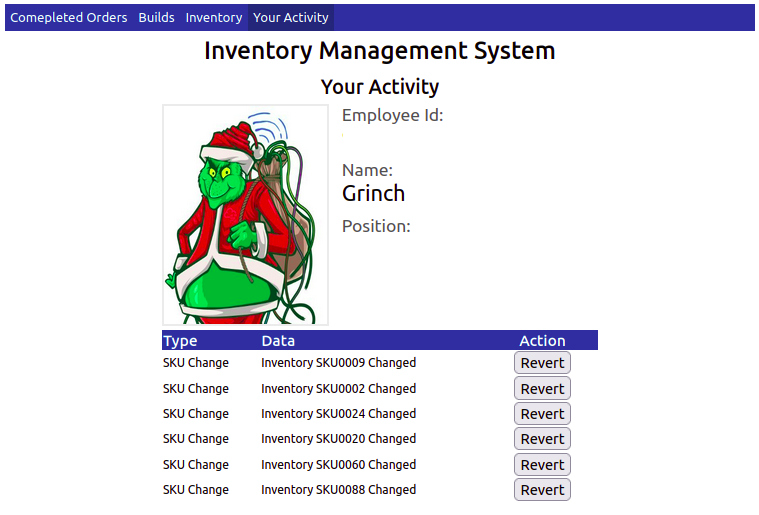

- The Your Activity page displays McSkidy's user information, photo and their recent actions on the system.

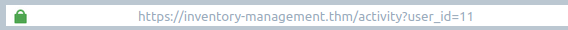

As we learnt above, an IDOR vulnerability requires changing some kind of user input. Out of all the pages we can navigate to, the only one with input that can be altered is the Your Activity page. You'll see in the URL a query component parameter named user_id, which is set to the value of 11 (McSkidy's user ID).

Try changing the user_id value in the address bar, and you'll see that the web application tries to load another user's information. Try different numbers between 1 and 20 until you find a user who could have been responsible for the tampering on the system.

Clicking on the Revert button on the user's actions will roll back the changes and allow the toy-making machine to make properly built toys again. Once all the changes have been reverted, you'll be rewarded with a flag which can be entered below.

After finding Santa's account, what is their position in the company?

After finding McStocker's account, what is their position in the company?

After finding the account responsible for tampering, what is their position in the company?

What is the received flag when McSkidy fixes the Inventory Management System?

If you want to learn more about IDOR vulnerabilities, we suggest trying out this room https://tryhackme.com/room/idor

Tasks released each day get progressively harder (but are still guided with walkthrough videos). Come back tomorrow for Day 2's task!

Check out John Hammond's walkthrough video for day 2 here

Story

McSkidy needs to check if any other employee elves have left or been affected by Grinch Industries' attack, but the systems that hold the employee information have been hacked. Can you hack them back to determine if the other teams in the Best Festival Company have been affected?

Learning Objectives

- Understand the underlying technology of web servers and how the web communicates.

- Understand what cookies are and their purpose.

- Learn how to manipulate and manage cookies for malicious use.

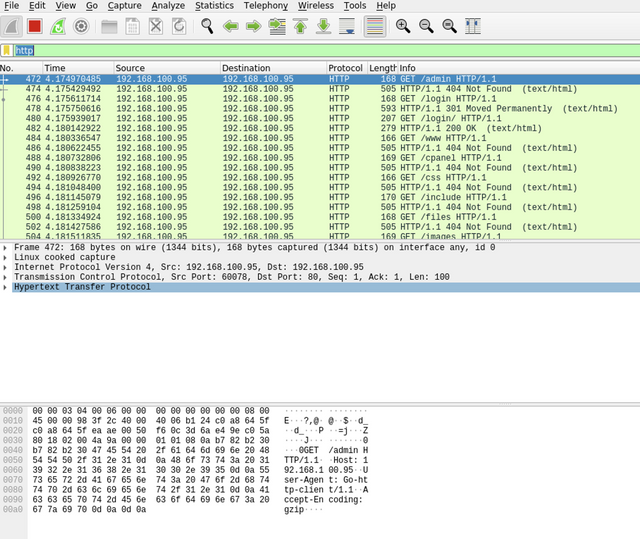

HTTP(S)

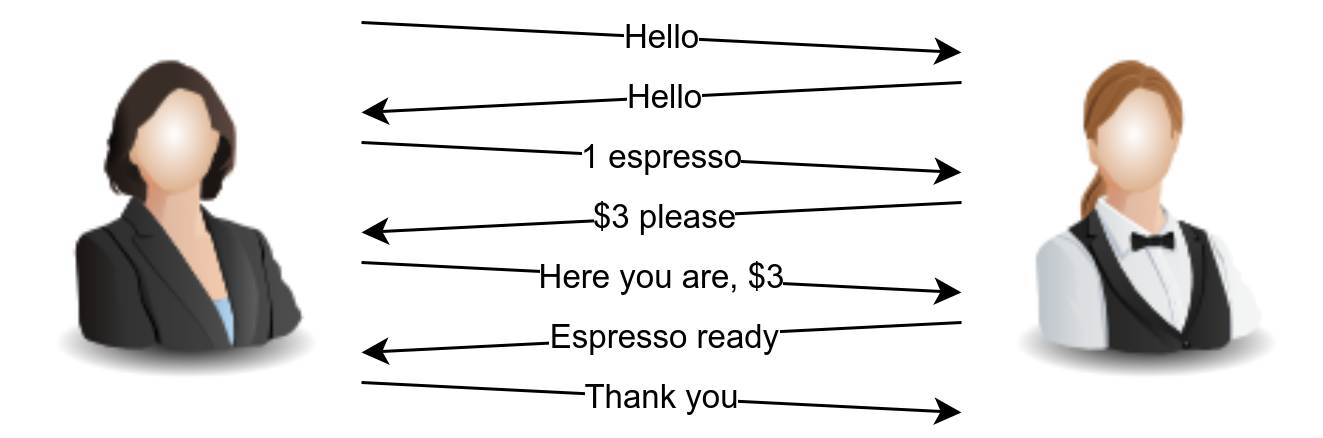

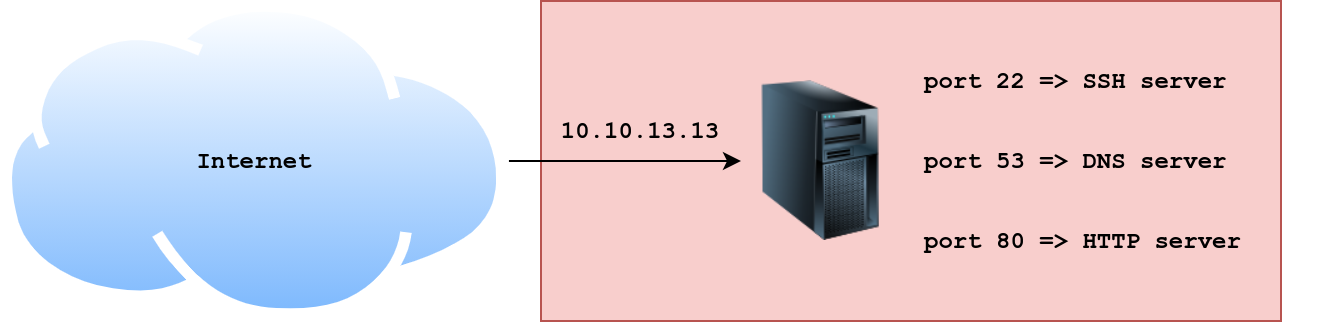

For your computer and a webserver to communicate with each other, an intermediary protocol is required. This is where HTTP (Hypertext Transfer Protocol) is introduced! HTTP is a client-server protocol that provides communication between a client and a webserver. HTTP requests are similar to a standard TCP network request; however, HTTP adds specific headers to the request to identify the protocol and other information.

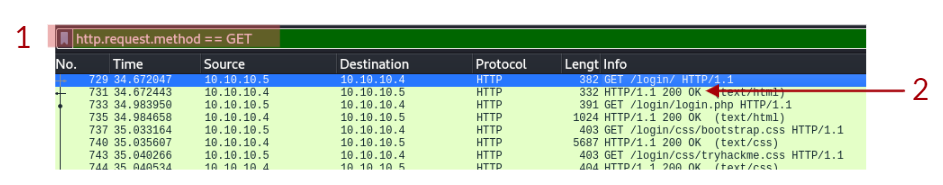

When an HTTP request is crafted, the method and target will always be included in the first line of the request. The target specifies what to retrieve from the server, and the method specifies how.

When retrieving information from a web server, it is common to use the GET method, such as loading a picture.

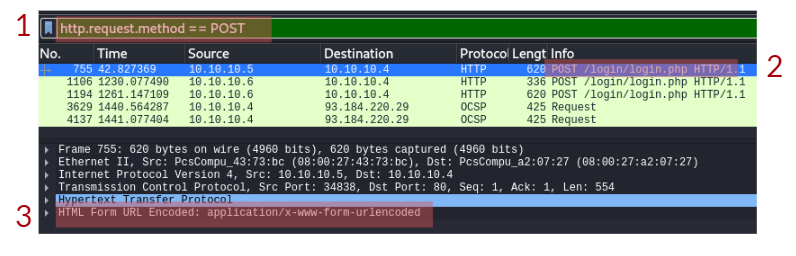

When sending data to a web server, it is common to use the POST method, such as sending login information.

Example Request

GET / HTTP/1.1

Host: tryhackme.com

User-Agent: Mozilla/5.0 Firefox/87.0

Referer: https://tryhackme.com/

Once the server receives a request, it will send back a response, including any requested content if successful and a status code. The status code is used to tell the client browser how the webserver interpreted the request. The most common "successful" status code is HTTP 200 OK.

Example Response

HTTP/1.1 200 OK

Server: nginx/1.15.8

Date: Wed, 24 Nov 2021 13:34:03 GMT

Content-Type: text/html

Content-Length: 98

<html>

<head>

<title>Advent of Cyber</title>

</head>

<body>

Welcome To Advent of Cyber!

</body>

</html>

The protocol itself is only one small piece of the puzzle; once content is retrieved from the web server, your browser needs a way to interpret and render the information sent. Web applications are commonly structured in HTML (HyperText Markup Language) and styled with CSS (Cascading Style Sheets). JavaScript is also commonly used to provide additional functionality.
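To make the structure of the example request shown earlier more concrete, here is a rough Python sketch that splits a raw HTTP/1.1 request into its method, target, version and headers. The function name parse_request is an illustrative assumption, and the parser is deliberately simplified; it is not how a real web server handles requests.

```python
def parse_request(raw: str):
    """Split a raw HTTP/1.1 request into (method, target, version, headers)."""
    # The header section ends at the first blank line.
    head, _, _body = raw.partition("\r\n\r\n")
    lines = head.split("\r\n")

    # First line: "<method> <target> <version>"
    method, target, version = lines[0].split(" ", 2)

    # Remaining lines: "Name: value"
    headers = {}
    for line in lines[1:]:
        name, _, value = line.partition(":")
        headers[name.strip()] = value.strip()
    return method, target, version, headers


raw = (
    "GET / HTTP/1.1\r\n"
    "Host: tryhackme.com\r\n"
    "User-Agent: Mozilla/5.0 Firefox/87.0\r\n"
    "Referer: https://tryhackme.com/\r\n"
    "\r\n"
)

method, target, version, headers = parse_request(raw)
print(method, target, version)
print(headers["Host"])
```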

In today's web environment, the use of web frameworks has significantly increased in popularity. Most modern web applications use many web frameworks and other web solutions that an end-user does not see or interact with.

For more information about HTTP requests, methods, and headers, check out the Web Fundamentals room!

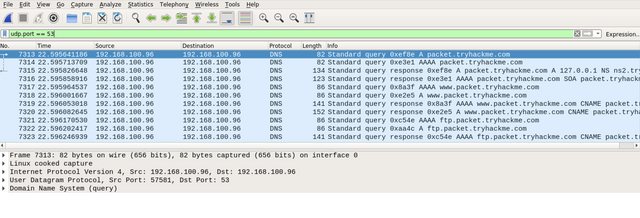

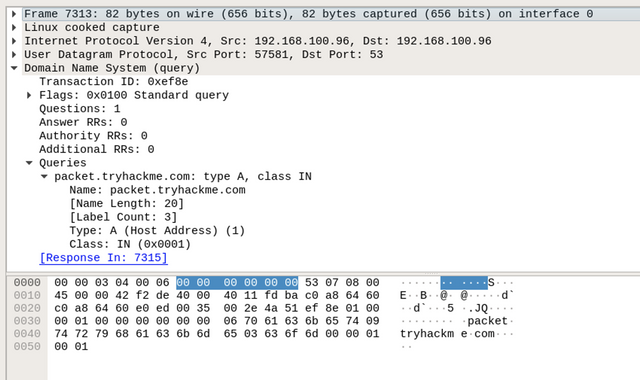

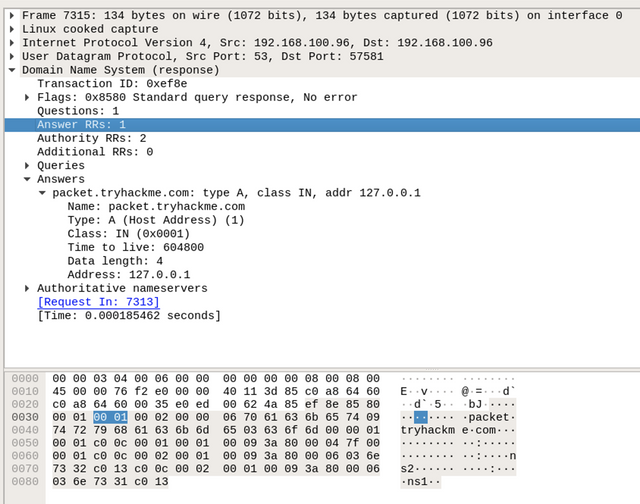

Cookies

HTTP is a stateless protocol. When you send requests to a web server, the server cannot distinguish your request from someone else's request. To solve the stateless problem and identify different users and access levels, the webserver will assign cookies to create and manage a stateful session between client and server.

Cookies are tiny pieces of data (metadata) or information locally stored on your computer that are sent to the server when you make a request.

Cookies can be assigned any name and any value allowing the webserver to store any information it wants. Today we will be focusing on authentication cookies, also known as session cookies. Authentication or session cookies are used to identify you and what access level is attached to your session.

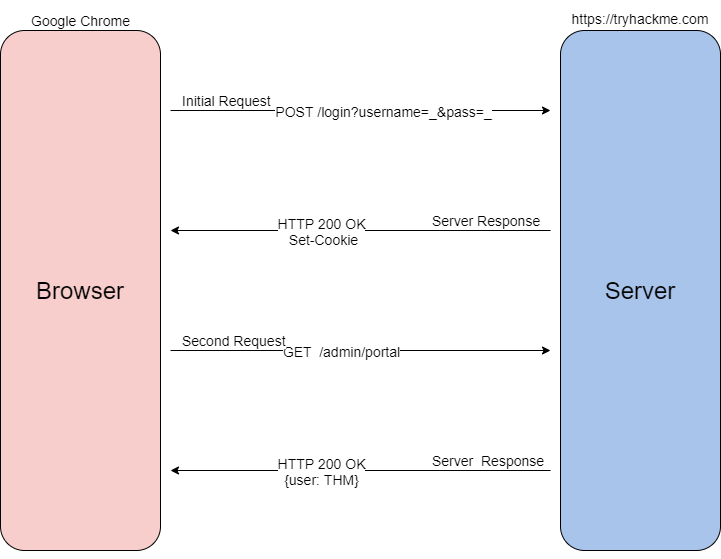

Below is a diagram describing assigning and using a cookie from the initial request to the session request.

To begin the process, when you send a request such as a login request, your browser will send that information typically as a POST request to the webserver. The web server will verify that it received the data and set a unique cookie; as previously mentioned, cookies are arbitrary, and values are determined by best-practice or the web developer. Once the cookie is assigned, as long as the cookie stays locally stored in your browser, all future GET requests will be automatically sent with that cookie to identify you and your access level. Once the server receives your GET request and cookie, it will locate and de-serialize your session. Deserialization is the process of taking a data format such as JSON and rebuilding it as an object. If successful, the webserver will reply to your request with a 200 response.
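The flow described above can be sketched in a few lines of Python. This is a simplified model under stated assumptions: the function names, the in-memory SESSION_STORE, the session fields and the 401 status returned for an unknown cookie are all illustrative choices, not details taken from the task.

```python
import json
import secrets

# Hypothetical server-side store: cookie value -> serialized session (JSON text).
SESSION_STORE = {}


def handle_login(username: str):
    """Model of the login POST: create a session and hand back a cookie value."""
    cookie_value = secrets.token_hex(16)  # unique, hard-to-guess value
    SESSION_STORE[cookie_value] = json.dumps({"username": username, "access": "user"})
    return 200, cookie_value


def handle_get(cookie_value: str):
    """Model of a later GET: locate the session and deserialize it."""
    serialized = SESSION_STORE.get(cookie_value)
    if serialized is None:
        return 401, None                  # unknown cookie, no session
    session = json.loads(serialized)      # JSON text -> Python object
    return 200, session


status, cookie = handle_login("mcskidy")
print(handle_get(cookie))
print(handle_get("not-a-real-cookie"))
```

In this model the cookie is only a lookup key, so editing it in the browser leads to a missing session rather than someone else's data. The manipulation discussed later works when the cookie value itself carries the session data.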

Now that we understand what cookies are and how they are used, let us dive into their contents.

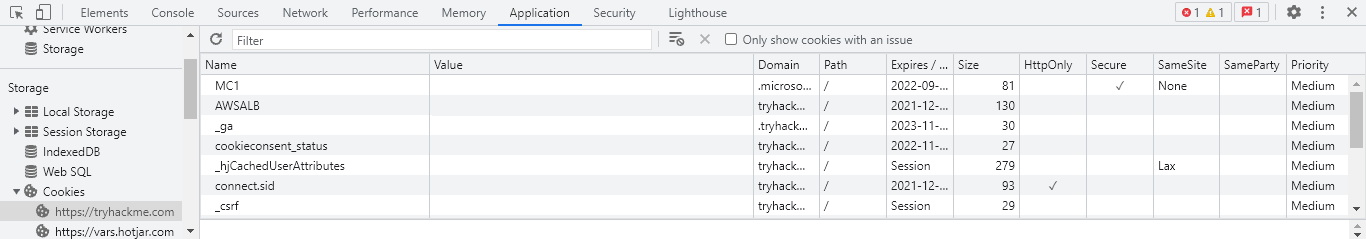

Cookie Components

Cookies are made up of 11 different components; you can find an explanation of each component in the table below.

| Component | Purpose | Example |
| --- | --- | --- |
| Name | Unique cookie identifier (arbitrarily set by web-server). Always paired with the value component. | SessionID |
| Value | Unique cookie value (arbitrarily set by web-server). Always paired with the name component. | sty1z3kz11mpqxjv648mqwlx4ginpt6c |
| Domain | Originating domain of the cookie. Sets the scope of the cookie. | .tryhackme.com |
| Path | Local path to cookie. Sets the scope of the cookie. | / |
| Expires/Max-age | Date/time at which the cookie expires | 2022-11-11T15:39:04.166Z |
| Size | Disk size of the cookie in bytes. This is typically {Name+Value} | 91 |
| HttpOnly | Cookie cannot be accessed through client-side scripts | (indicated by a checkmark) |
| Secure | Cookie is only sent over HTTPS | (indicated by a checkmark) |
| SameSite | Specifies when a cookie is sent through cross-site requests | none |
| SameParty | Extends functionality of SameSite attribute to First-Party sets. | (indicated by a checkmark) |
| Priority | Defines the importance of a cookie. Determines whether it should be removed or held on to | High |

Looking at all the components of a cookie may seem intimidating. There is no need to worry: as attackers, we only need to concern ourselves with two components, Name and Value; the rest of the components are handled by the webserver.

Cookie components are always prepared in pairs. The main pair is name-value; this will define the name of the cookie and the value of the name. The second pair is the attribute-value pair; this will define an attribute of the cookie and the value of the attribute. Below is an example of what Set-Cookie syntax looks like.

Set-Cookie: <cookie-name>=<cookie-value>; Domain=<domain-value>; Secure; HttpOnly
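As a small illustration of the syntax above, Python's standard http.cookies module can assemble a Set-Cookie header from a name-value pair plus attributes. The cookie name, value and domain are taken from the example column of the table; using them together in one header is an assumption made for the sake of the sketch.

```python
from http.cookies import SimpleCookie

cookie = SimpleCookie()

# The main name-value pair.
cookie["SessionID"] = "sty1z3kz11mpqxjv648mqwlx4ginpt6c"

# Attribute-value pairs and flags attached to that cookie.
cookie["SessionID"]["domain"] = ".tryhackme.com"
cookie["SessionID"]["secure"] = True
cookie["SessionID"]["httponly"] = True

# output() renders the header line a server would send.
header = cookie.output()
print(header)
```

Note that the module decides the order in which attributes are printed, so the line may not match the template above attribute for attribute.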

Cookie Manipulation

Cookie manipulation is taking a cookie and modifying it to obtain behavior not intended by the web developer. Cookie manipulation is possible because cookies are stored locally on your host system, meaning you have complete control over them and can modify them as you please.

To begin modifying and manipulating cookies, we need to open our developer tools. In Google Chrome, developer tools are known as the "Chrome Developer Tools," and in Mozilla Firefox, they are known as the "Firefox Developer Tools."

Developer tools can be accessed by pressing F12 or Ctrl+Shift+I. Once developer tools are open, to access your cookies, navigate to the Storage tab in Firefox or Application tab in Chrome/Edge; select the Cookies dropdown on the left-hand side of the console.

Cookie values may seem random at first; however, they often have an encoded value or meaning behind them that can be decoded to a non-arbitrary value such as a JavaScript object.

From an attacker's perspective, you can decode the cookie value to identify the underlying objects. Once you have identified the underlying objects, you can modify them to what you want. To use the cookie, you will need to encode it back to the original encoding and replace the cookie value. Below is an example of a decoded cookie value.

{firstName:"John", lastName:"Doe", age:50, eyeColor:"blue"}

Now that we have all of the pieces of cookies and how to manipulate them, we can put them all together to gain unintended access.

Below is a summary of how cookie values could be manipulated.

- Obtain a cookie value from registering or signing up for an account.

- Decode the cookie value.

- Identify the object notation or structure of the cookie.

- Change the parameters inside the object to a different parameter with a higher privilege level, such as admin or administrator.

- Re-encode the cookie and insert the cookie into the value space; this can be done by double-clicking the value box.

- Action the cookie; this can be done by refreshing the page or logging in.
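The decode, modify and re-encode steps above can be sketched as follows. This example assumes, purely for illustration, that the cookie value is Base64-encoded JSON with username and role fields; the encoding and object format used by the actual challenge are for you to identify.

```python
import base64
import json


def decode_cookie(value: str) -> dict:
    """Decode an (assumed) Base64-encoded JSON cookie value."""
    return json.loads(base64.b64decode(value))


def encode_cookie(obj: dict) -> str:
    """Re-encode an object using the same (assumed) scheme."""
    return base64.b64encode(json.dumps(obj).encode()).decode()


# Hypothetical cookie as it might be issued to a low-privilege account.
original = encode_cookie({"username": "guest", "role": "user"})

session = decode_cookie(original)   # decode and inspect the structure
session["role"] = "admin"           # change a field to a higher privilege level
forged = encode_cookie(session)     # re-encode with the original encoding

print(original)
print(forged)
```

The forged value is what would be pasted into the cookie's value box in the developer tools before refreshing the page.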

Additional Resources

For more information about HTTP(S) and cookies, check out these other TryHackMe rooms.

- HTTP: https://tryhackme.com/jr/httpindetail

- Authentication: https://tryhackme.com/jr/authenticationbypass

If you're stuck solving the challenge, check out the walkthrough video here

Register an account, and verify the cookies using the Developer Tools in your browser.

What is the name of the new cookie that was created for your account?

What encoding type was used for the cookie value?

What object format is the data of the cookie stored in?

Manipulate the cookie and bypass the login portal.

What is the value of the administrator cookie? (username = admin)

What team environment is not responding?

What team environment has a network warning?

If you want to learn more about Authentication bypasses, we suggest trying out this room https://tryhackme.com/jr/authenticationbypass

Tasks released each day get progressively harder (but are still guided with walkthrough videos). Come back tomorrow for Day 3's task, where InsiderPhD will be recording a video walkthrough!

Grinch Enterprises have also tried to block communication between anyone at the company. They've locked everyone out of their email systems and McSysAdmin has also lost access to their admin panel. Can you find the admin panel and help restore communication for the Best Festival Company?

Check out InsiderPhD's walkthrough video for day 3 here

Learning Objectives

In today's task, we're going to be using our investigatory skills and techniques to discover unlisted content and attempt to authenticate with some common credentials, using the clues around us.

What is Content Discovery & Why is it Useful?

Before we begin looking for content, let's define what "content" actually is. Content is the assets and inner workings of the application that we are testing. Contents can be files, folders, or pathways that weren't necessarily intended to be accessed by the general public.

For example, you may have a blog where you post about your tasty treats! You want everyone to view all of your delicious snacks, but you don't want everyone to be able to manage what delicious snacks are up for review, so you may hide the administrator panel away from the public!

Let's expand on this. Web servers, unless configured otherwise, are designed to serve these files and folders, as long as you know the names.

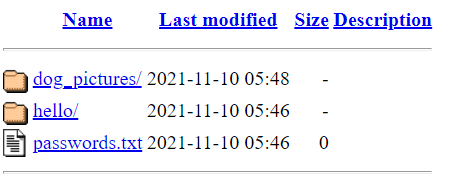

Content discovery is a useful technique to have in our arsenal because it allows us to find things that we aren't supposed to see. For example, we may be able to find:

- Configuration files

- Passwords and secrets

- Backups

- Content management systems

- Administrator dashboards or portals

These are just some examples of the types of information that we may be able to uncover by discovering content. We can find these pieces of information because they are stored in either a folder (which will have a name) or a file (which will have both a name and extension). This means that we can search for files by their extension, for example, discovering text files with the extension of .txt.

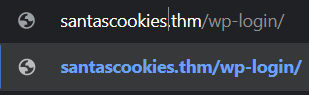

You can do this manually by thinking of some names such as "admin" or "passwords.txt" and navigating to them in your browser. However, we can use tools to do this process for us at a considerably faster rate. Enter Dirbuster. Dirbuster is a tool that we can use to automate this process for us. The tool works by accepting a wordlist, which is a file containing everything that we want to search for, and then a few other arguments. Let's demonstrate this in the snippets below:

cmnatic@thm$ cat wordlist.txt

admin/

docs/

config/

install/

install-en/

Dirbuster will scan the website "santascookies.thm" for the folders listed within this wordlist. To use Dirbuster to discover content on this site, we would use the command dirb and provide some information such as the URL of the website and the location of the wordlist. Our final command would look similar to the snippet below:

cmnatic@thm$ dirb http://santascookies.thm/ /usr/share/wordlists/mywordlist.txt

In the above, we have provided Dirbuster with the following arguments:

- The URL of the website

- The location (full path) on our attacking machine of our wordlist. If you are unfamiliar with this, check out Linux Fundamentals.

Now, once we execute this command, Dirbuster will search for the existence of a directory on the website using every value in the wordlist that we have created.
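Conceptually, what the tool does can be sketched in Python as below. This is a simplified model, not the tool's real logic: the function discover, the rule "anything other than 404 counts as found", and the fake_status stand-in (used here instead of real network requests) are all assumptions for illustration.

```python
from typing import Callable, Iterable


def discover(base_url: str, words: Iterable[str], fetch_status: Callable[[str], int]) -> list:
    """Request base_url + word for every word and keep the ones that exist."""
    found = []
    for word in words:
        url = base_url.rstrip("/") + "/" + word.strip()
        # Simplification: treat any status other than 404 as "exists".
        if fetch_status(url) != 404:
            found.append(url)
    return found


# The same entries as the wordlist.txt example above.
wordlist = ["admin/", "docs/", "config/", "install/", "install-en/"]


def fake_status(url: str) -> int:
    """Stand-in for a real HTTP request; pretends only /admin/ exists."""
    return 200 if url.endswith("/admin/") else 404


hits = discover("http://santascookies.thm/", wordlist, fake_status)
print(hits)
```

Swapping fake_status for a function that performs a real request would turn the sketch into a very basic content discovery script, which should only ever be pointed at machines you are authorised to test.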

cmnatic@thm:/usr/share/wordlists/dirb# ls

big.txt euskera.txt mutations_common.txt spanish.txt

catala.txt extensions_common.txt others stress

common.txt indexes.txt small.txt vulns

Your ability to discover content is only as good as your wordlist. You can find other collections of open-source wordlists, such as SecLists, where you may be able to use a combination of context and wordlists to discover content.

Default Credentials

Web applications and services often come with a default pair of credentials. Developers leave these credentials in place so that you can quickly get started with the platform (with the hopes that you will change them). However, as you'll come to discover, not everyone does, or in fact, applications often include other accounts that are not well documented. SecLists also provide a wordlist for default credentials, which you can find here.

For example, take a guess at using some common usernames and password combinations for privileged users. This could include:

| Count | Username | Password |
| --- | --- | --- |
| #1 | administrator | administrator |
| #2 | administrator | password123 |
| #3 | administrator | letmein |

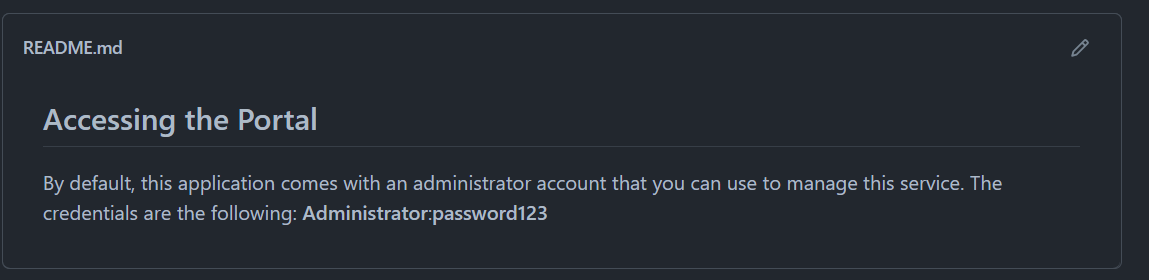

Sometimes, these credentials are stored in the web application's configuration files or public documentation. For example, this application, "BikeIT", is an open-source application for registering your bicycle to a community page. The administrator account is enabled by default, with credentials provided in both the documentation and source code.

The photo above shows the "Read Me" documentation (or instructions) for the web application.

$username="Administrator";

$password="letmein123"; //change this

The source code contains these default credentials too.

Unless the administrator installing this application changes the credentials, anyone who knows where these details are published (as they usually are with open-source projects), or who is capable of guessing them, will be able to log in.

For today's task, you will need to deploy the vulnerable machine attached to this task by pressing the green "Start Machine" button at the top right of this task and the TryHackMe AttackBox, which can be deployed by pressing the "Start AttackBox" button located at the top-right of the room.

Additional Resources

If you are interested in learning more (or wish to apply your knowledge) about content discovery and authentication bypass, check out the following rooms on TryHackMe:

In your web browser, try some default credentials on the newly discovered login form for the "administrator" user. What is the password?

Access the admin panel. What is the value of the flag?

McSysAdmin managed to reset everyone's access except Santa's! Santa's expected some urgent travel itinerary for his route over Christmas. Rumour has it that Santa never followed the password security recommendations. Can you use bruteforcing to help him access his accounts?

Check out Nahamsec's walkthrough video for day 4 here

Learning Objectives

In today’s task, we’re going to learn the following.

- Understanding authentication and where it is used

- Understanding what fuzzing is

- Understanding what Burp Suite is and how we can use it for fuzzing a login form to gain access

- Apply this knowledge to retrieve Santa’s travel itinerary

What is authentication, and where is it Used?

Authentication is the process of verifying a user’s identity, establishing that they are who they say they are. Identity can be proven using a variety of authentication means, including:

- A set of credentials known to both the server and the user, such as a username and password

- Token authentication (these are unique pieces of encrypted text)

- Biometric authentication (fingerprints, retina data, etc.)

Authentication is often used interchangeably with authorisation, but it is very different. Authorisation is a term for the rules defining what an authenticated user can and cannot access. For example, a standard authenticated user will only be allowed access to some aspects of a website. An authenticated administrator will be able to access the entire thing – their level of authorisation determines this.
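The distinction can be shown with a short sketch: one function answers "who are you?" and a separate one answers "what may you access?". The user names, passwords, roles and paths below are invented for illustration, and storing plain-text passwords in a dictionary is a simplification that a real application should not copy.

```python
# Hypothetical user database and permission table.
USERS = {
    "alice": {"password": "correct horse", "role": "user"},
    "root": {"password": "battery staple", "role": "administrator"},
}
PERMISSIONS = {
    "user": {"/profile"},
    "administrator": {"/profile", "/admin"},
}


def authenticate(username: str, password: str):
    """Authentication: verify the identity, return the username or None."""
    user = USERS.get(username)
    if user is not None and user["password"] == password:
        return username
    return None


def authorise(username: str, path: str) -> bool:
    """Authorisation: decide what an already authenticated user may access."""
    role = USERS[username]["role"]
    return path in PERMISSIONS[role]


who = authenticate("alice", "correct horse")
print(who, authorise(who, "/profile"), authorise(who, "/admin"))
```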

Authentication is used when there is a necessity to know who is accessing pieces of data or information to create accountability – protecting the Confidentiality element of the CIA triad. If you wish to learn more about this, I recommend checking out the Principles of Security room on TryHackMe. You can find examples of authentication in the real world, for example, a badge or key-card to access restricted places within a building.

What is Fuzzing?

Put simply, fuzzing is an automated means of testing an element of a web application until the application reveals a vulnerability or valuable information. When we are fuzzing, we provide information as we typically would when interacting with it, just at a much faster rate. This means that we can use extensive lists known as wordlists to test a web application’s response to various information.

For example, rather than testing a login form for a valid set of credentials one-by-one (which is exceptionally time-consuming), we can use a tool for fuzzing this login form to test hundreds of credentials at a much faster rate and more reliably.
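At its core, fuzzing a login form is a loop over a wordlist. The sketch below models that loop against a fake login check instead of a real web application; the function names, the username, the candidate passwords and the "correct" password are all made up for illustration.

```python
from typing import Callable, Sequence


def fuzz_login(username: str, passwords: Sequence[str], try_login: Callable[[str, str], bool]):
    """Try each candidate password; return (password, attempts) or (None, attempts)."""
    for attempt, password in enumerate(passwords, start=1):
        if try_login(username, password):
            return password, attempt
    return None, len(passwords)


def fake_login(username: str, password: str) -> bool:
    """Stand-in for submitting the login form; accepts one made-up password."""
    return username == "cmnatic" and password == "letmein"


candidates = ["123456", "password123", "letmein", "qwerty"]
result = fuzz_login("cmnatic", candidates, fake_login)
print(result)
```

A tool such as Burp Suite performs the same loop, but sends real HTTP requests and records each response for you to inspect.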

Fuzzing with Burp Suite

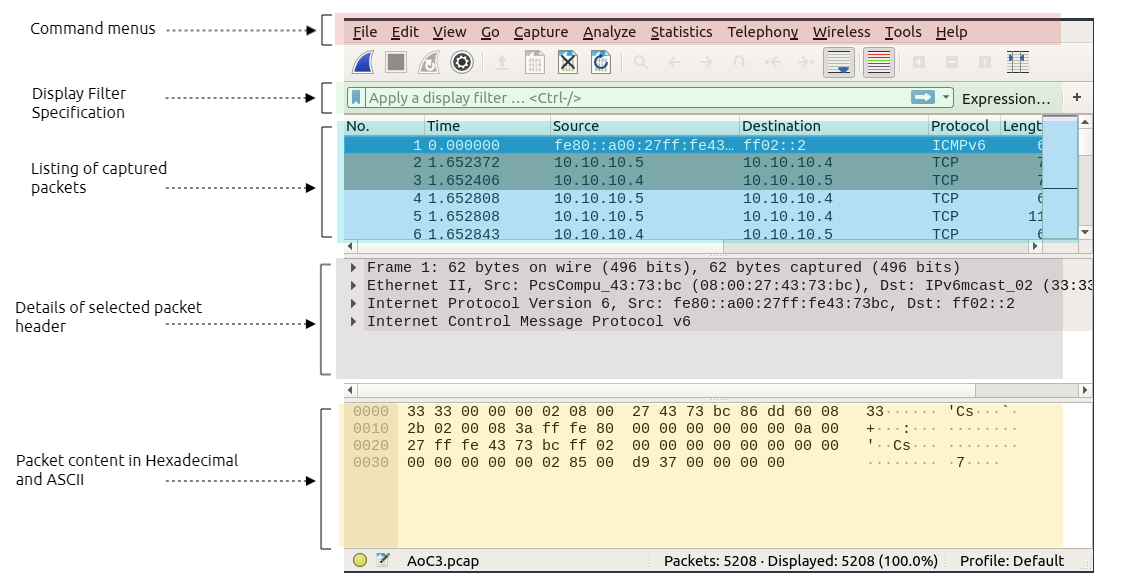

Please note that this task presumes that you are using the TryHackMe AttackBox. The AttackBox has the necessary software, tools and wordlists installed for you to complete today’s task – so it is highly recommended that you use it (to start it, scroll to the top of this page and click the blue "Start AttackBox" button, then follow the steps below).

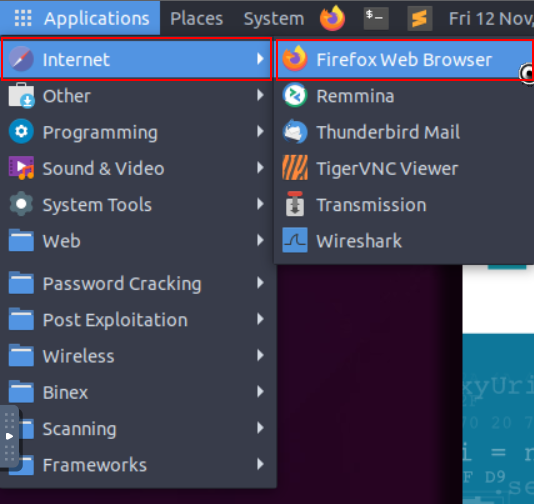

1. Let’s launch Firefox by navigating to “Applications -> Internet -> Firefox”

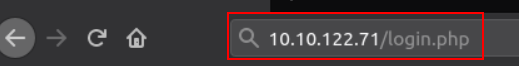

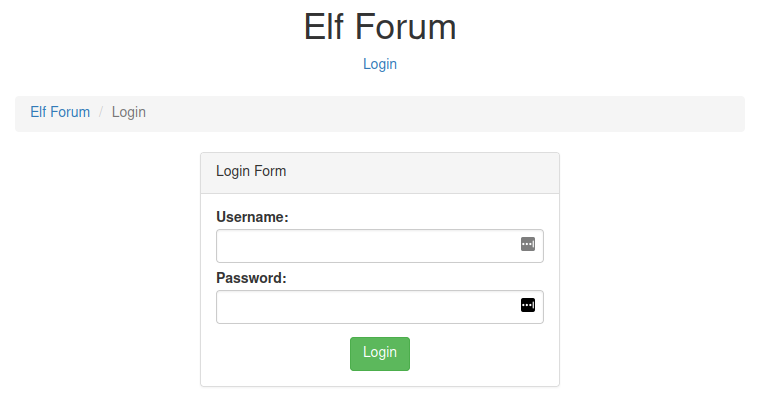

2. Navigate to the login form in the web browser using the vulnerable IP address (http://MACHINE_IP) (note that the IP address in the screenshots is only an example, you will need to replace this with the MACHINE_IP)

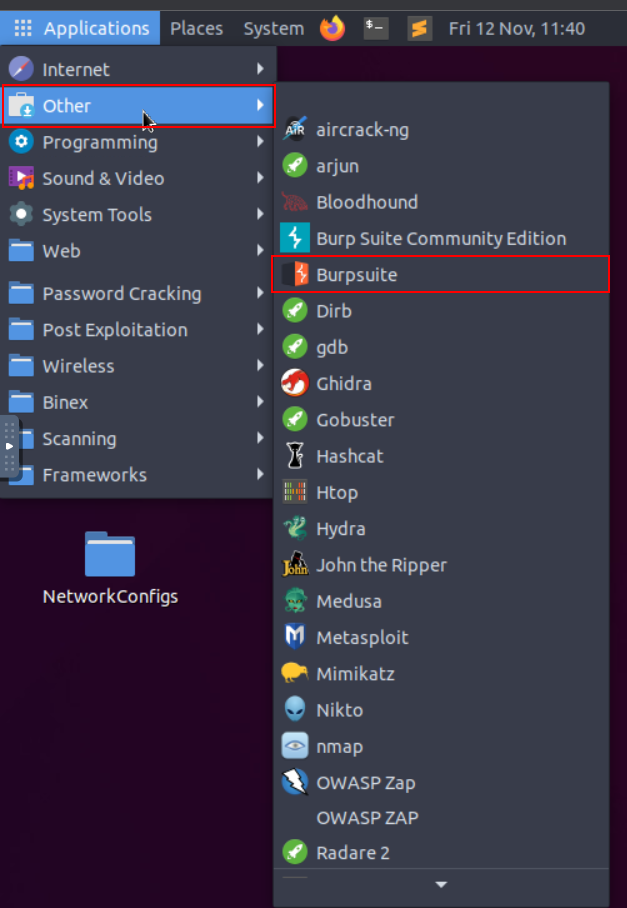

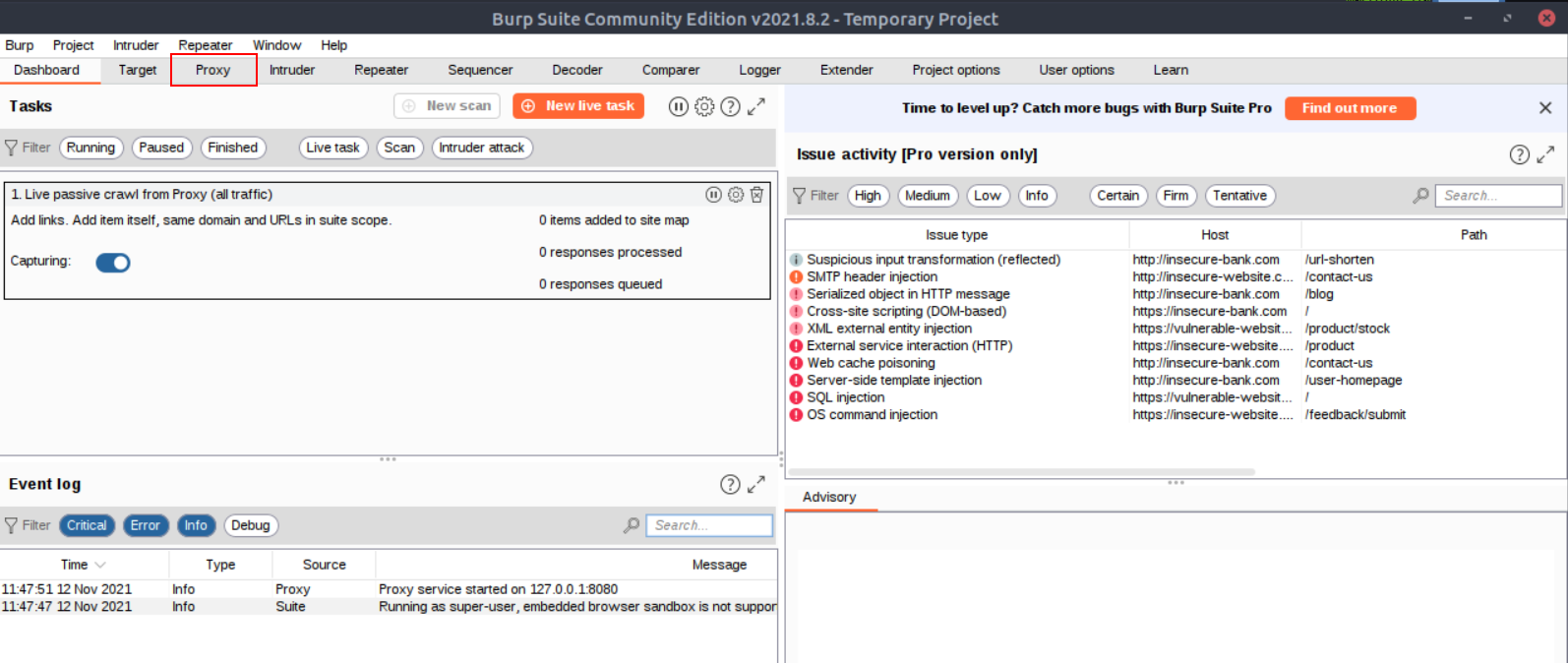

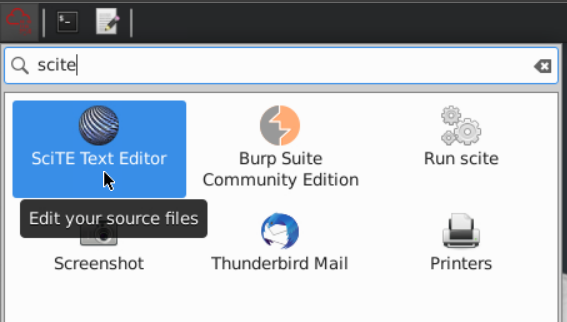

3. Let’s launch Burp Suite by navigating to “Applications -> Other -> Burp Suite”

4. Navigate to the "Proxy" tab:

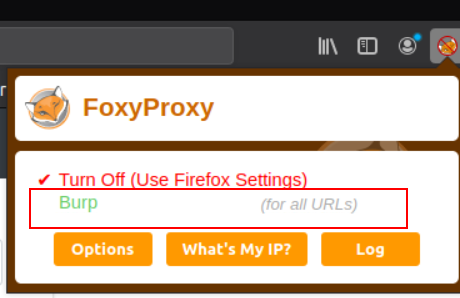

5. And press "Intercept On" in "Proxy -> Intercept"

6. Now we will need to return to our Firefox Browser and enable the FoxyProxy extension in Firefox. You can do this by pressing the “FoxyProxy” extension and pressing “Burp” like so:

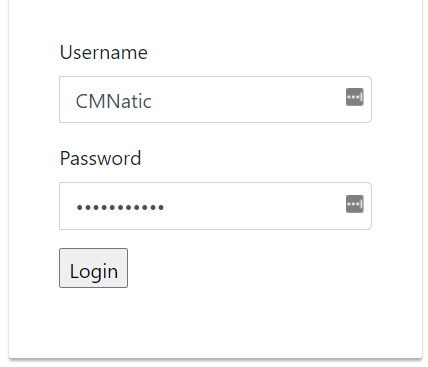

7. Submit some dummy data on the login form, such as below. Please note that the web browser will hang:

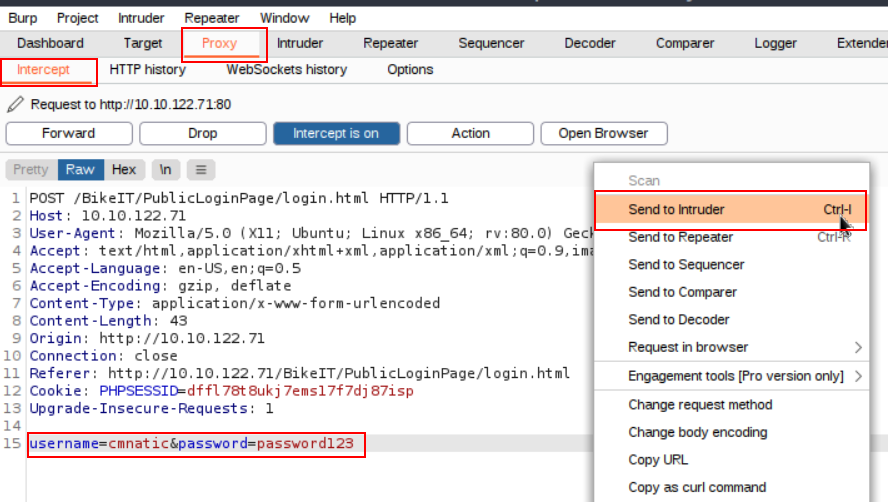

8. We will need to return to Burp Suite, where we will see some data has now been returned to us. Right-click the window containing the data and press “Send to Intruder”

9. Navigate to the “Intruder” tab and select the following:

10.1. Click the “Positions” tab and clear the pre-selected positions by pressing "Clear $"

10.2. Add the value of the "username" parameter as the first position (this can be done by highlighting the value in the username parameter and clicking “Add $”). Sometimes we will already know the username; other times we will not, and we can tell Burp to use a wordlist of usernames for this position as well. In this example the username is already known (i.e. cmnatic), so we are only brute-forcing the password.

10.3. Add the “password” value as the position (i.e. password123) (this can be done by highlighting the value in the password parameter and clicking “Add $”)

10.4. Select “Cluster Bomb” in the Attack type in the dropdown menu.

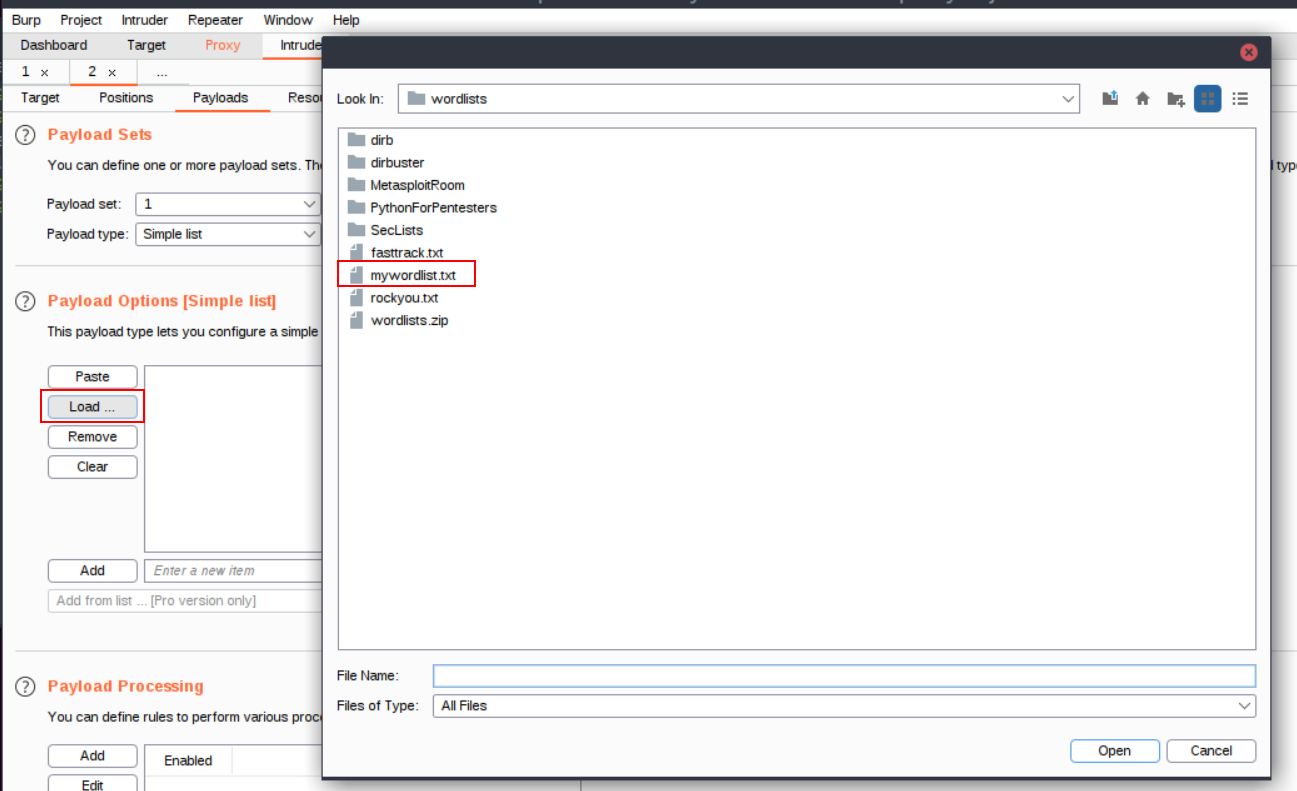

10.5. We will now need to provide some values for Burp Suite to fuzz the login form with passwords. We can provide a list manually, or we can provide a wordlist. I will be providing a wordlist.

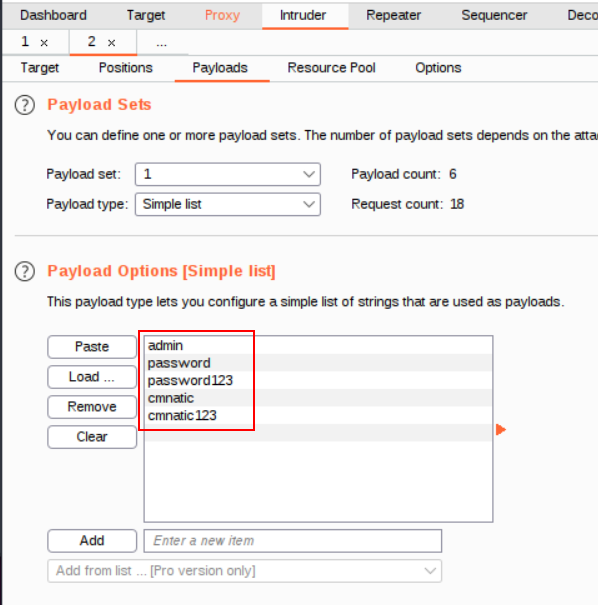

11. Now after selecting our wordlist, we can see that some passwords have filled the “Payload Options” window:

12. Now let’s press the “Start Attack” button; this will begin fuzzing the login form. Look at the results and sort by “Length”. Unsuccessful passwords will return one response length, and successful passwords will return another. You will need to examine the results with different lengths to determine the correct password in today’s task.

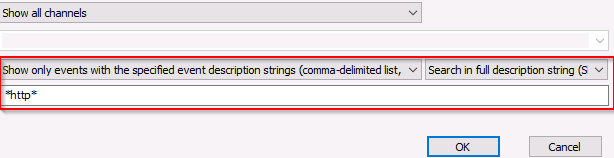
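The "sort by Length" step can be sketched offline in Python. This is a minimal illustration only: `fake_login_response` is a hypothetical stand-in for the target (a real attack sends HTTP requests through Burp Suite), and the "valid" password and response bodies are invented for the demo.

```python
from collections import Counter


def fake_login_response(username: str, password: str) -> str:
    """Hypothetical target: returns a response body for a login attempt."""
    # The valid password below is an assumption made up for this sketch.
    if username == "cmnatic" and password == "letmein":
        return "<html>Welcome back! Redirecting to your dashboard...</html>"
    return "<html>Invalid credentials</html>"


def fuzz_passwords(username, wordlist):
    """Try every password and record the length of each response."""
    return [(pw, len(fake_login_response(username, pw))) for pw in wordlist]


def outliers(results):
    """Return passwords whose response length differs from the most common one."""
    common_length, _ = Counter(length for _, length in results).most_common(1)[0]
    return [pw for pw, length in results if length != common_length]


wordlist = ["123456", "password123", "letmein", "qwerty"]
results = fuzz_passwords("cmnatic", wordlist)
candidates = outliers(results)
```

Here `candidates` holds the passwords whose responses stand out from the rest, which is the same signal you look for by eye in the Intruder results table.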

Navigate to the vulnerable login form at http://MACHINE_IP/ and apply the material for today's task to log in to Santa's itinerary, using the username "santa" and the password list located at /root/Rooms/AoC3/Day4/passwords.txt on the TryHackMe AttackBox (or download it from here) for your payload.

Additional Resources

If you are interested in learning more about Burp Suite, check out the Burp Suite module on TryHackMe.

Configure Burp Suite & Firefox, submit some dummy credentials and intercept the request. Use Intruder to attack the login form.

What valid password can you use to access the "santa" account?

What is the flag in Santa's itinerary?

Story

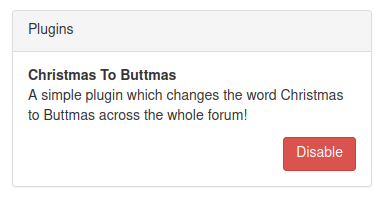

The Elf Forum is where all the elves express their joy and excitement about Christmas, but Grinch Enterprises has one bad admin account, and they've installed a plugin that changes all mentions of Christmas to Buttmas!! McSkidy needs to find that admin account and disable the plugin.

Learning Objectives

- What is an XSS vulnerability?

- What types of XSS vulnerabilities are there?

- Challenge Walkthrough.

Check out Nahamsec's walkthrough video for day 5 here

What is an XSS vulnerability?

Cross-Site Scripting, better known as XSS in the cybersecurity community, is classified as an injection attack where malicious JavaScript gets injected into a web application with the intention of being executed by other users.

If you can get JavaScript to run on a victim's computer, there are numerous things you can achieve. This can range from stealing the victim's cookies to take over their session, running a keylogger that will log every key the user presses on their keyboard while visiting the website, redirecting the user to a totally different website altogether or performing some kind of action on the website such as placing an order, or resetting their password etc.

What types of XSS vulnerabilities are there?

XSS vulnerabilities fall into four different types: DOM, Reflected, Stored and Blind. Each type is quite a complex subject, which we'll try to cover briefly here, but to gain a more in-depth understanding, you might want to try this room after completing the challenge: https://tryhackme.com/room/xssgi

DOM:

DOM stands for Document Object Model and is a programming interface for HTML and XML documents. It represents the page so that programs can change the document structure, style and content. A web page is a document, and this document can be either displayed in the browser window or as the HTML source.

DOM Based XSS is where the JavaScript execution happens directly in the browser without any new pages being loaded or data submitted to backend code. Execution occurs when the website's JavaScript code acts on input or user interaction. An example of this could be a website's JavaScript code reading the contents of window.location.hash and then writing them onto the page in the section currently being viewed. If the contents of the hash aren't checked for malicious code, an attacker can inject JavaScript of their choosing onto the webpage.

Reflected:

Reflected XSS happens when user-supplied data in an HTTP request is included in the webpage source without any validation. An example of this could be an error message in the query string of a URL that is reflected onto the webpage. The URL could look something like the following:

https://website.thm/login?error=Username%20Is%20Incorrect

The error message could be replaced with JavaScript code which gets executed when a user visits the page.
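To make the reflected case concrete, here is a minimal Python sketch of a page that echoes the error parameter. The two render functions and the `<p class='error'>` markup are hypothetical; they only illustrate the difference between reflecting input as-is and escaping it first.

```python
import html
from urllib.parse import parse_qs, urlsplit


def render_error_unsafe(url: str) -> str:
    """Reflect the 'error' query parameter into HTML without any checks."""
    error = parse_qs(urlsplit(url).query).get("error", [""])[0]
    return f"<p class='error'>{error}</p>"


def render_error_escaped(url: str) -> str:
    """Same page, but HTML-escape the value before reflecting it."""
    error = parse_qs(urlsplit(url).query).get("error", [""])[0]
    return f"<p class='error'>{html.escape(error)}</p>"


benign = "https://website.thm/login?error=Username%20Is%20Incorrect"
malicious = "https://website.thm/login?error=%3Cscript%3Ealert(1)%3C/script%3E"

unsafe_page = render_error_unsafe(malicious)    # contains a live <script> tag
escaped_page = render_error_escaped(malicious)  # the tag is rendered as text
```

With the unescaped version, the browser would treat the injected tag as part of the page; with escaping, the same input is displayed as harmless text.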

Stored:

As the name implies, the XSS payload is stored on the web application (in a database, for example) and then gets run when other users visit the site or web page. This type of XSS can be particularly damaging due to the number of victims that may be affected. An example of this could be a blog that allows visitors to leave comments. If a visitor's message is not properly validated and checked for XSS payloads, then every subsequent visit to the blog page would run the malicious JavaScript code.

Blind:

Blind XSS is similar to stored XSS in that your payload gets stored on the website for another user to view, but in this instance, you can't see the payload working or test it against yourself first. An example of this could be a contact form: your message could contain an XSS payload, which gets executed when a member of staff views the message.

Challenge Walkthrough

Click the green "Start Machine" button on the top right of this task. Once the machine has loaded, please click the following link:

https://LAB_WEB_URL.p.thmlabs.com (if you receive an error, wait 30 seconds and try refreshing the page)

Here you'll find the elves forum where they talk about and spread the joy of Christmas! As you explore the forum and click on the different topics and threads, you'll notice that every mention of Christmas has been changed to Buttmas! This is because the grinch has an admin account and has installed a plugin that changes every mention of Christmas to Buttmas! You'll need to take over the Grinch account and disable the plugin to restore the Christmas joy!

First, click the login link towards the top of the page and log in with the following credentials:

Username: McSkidy

Password: password

Now that you're logged in you'll notice the navigation bar has now changed from Login to Settings and Logout.

Click on the Settings link, and you'll notice a feature for changing McSkidy's login password. Try changing your password to pass123. When you do this, you'll notice your address bar changes to look like the below screenshot (yours will have the IP address MACHINE_IP instead). If you can somehow trick the Grinch into visiting this URL, it will change their password to pass123.

Let's now go back to the forum and visit one of the threads. If you scroll to the bottom, you'll notice that you are able to leave a comment. Try leaving the following comment:

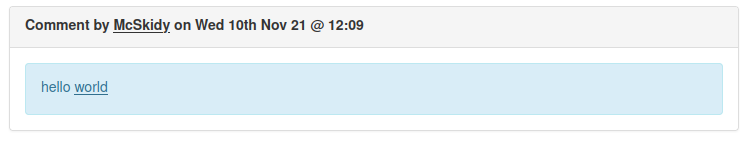

hello <u>world</u>

Once your comment is posted, you'll see that the word world has been underlined.

This means the comment is reflected as you wrote it without the underline HTML tags being stripped out. As this isn't being stripped out, you can try the HTML script tags instead to see if the website will run any JavaScript that is entered.

Using the URL you found earlier for changing the user's password, you can try the following payload:

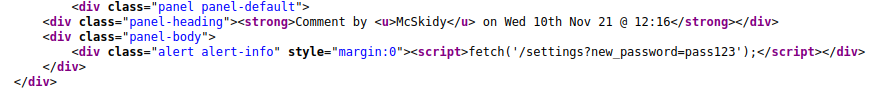

<script>fetch('/settings?new_password=pass123');</script>

The <script> tag tells the browser we want to run some JavaScript, and the fetch command makes a network request to the specified URL.
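The path passed to fetch is relative, so the browser resolves it against the page the victim is viewing and sends the victim's own session cookie with it. The Python sketch below only illustrates that URL resolution; the thread path `/posts/1` is a hypothetical example, not a path taken from the lab.

```python
from urllib.parse import parse_qs, urljoin, urlsplit

# Hypothetical thread URL; your lab host and thread path will differ.
page_url = "https://LAB_WEB_URL.p.thmlabs.com/posts/1"

# A path starting with "/" replaces the page's path but keeps scheme and host.
request_url = urljoin(page_url, "/settings?new_password=pass123")

# The query string carries the new password to the settings endpoint.
params = parse_qs(urlsplit(request_url).query)
```

Because the request goes to the same site the victim is logged in to, it is handled as if the victim had changed their own password.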

After posting the above as a comment, you could view the webpage source to see whether it has been shown correctly. The screenshot below shows us that the script tags aren't being stripped out or disabled and, in fact, are working:

Now that we have this XSS running on the forum, it means that any logged in users viewing the thread will automatically have their password changed to pass123. So let's log out and see if the Grinch has visited the thread by trying to log in as them (It may take up to a minute before the Grinch visits the page and their password changes).

Username: grinch

Password: pass123

Once logged in, go to the settings page again, and this time, you'll discover another feature with the option to disable the Christmas to Buttmas plugin.

Disable the plugin, and you'll be awarded a flag which can be entered below.

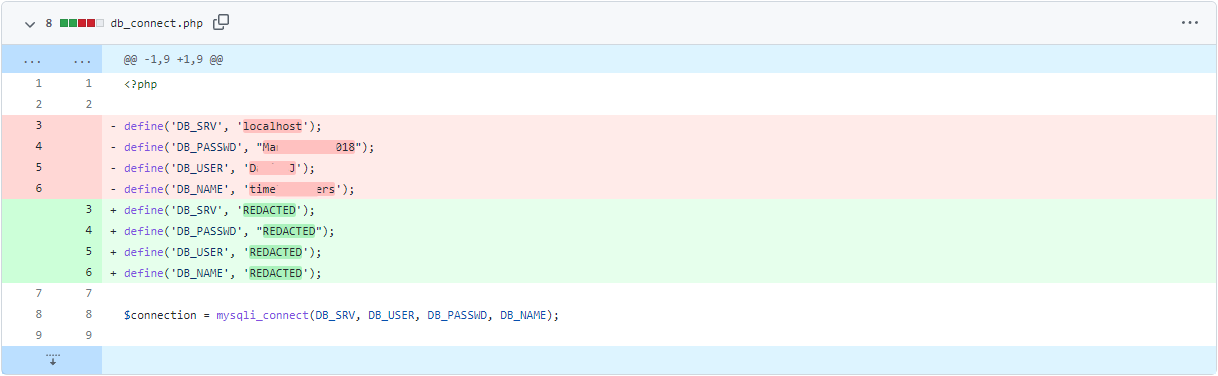

During a routine security audit before the Incident, McSkidy discovered some recovery passwords on an old server. She created a ticket to decommission this server to reduce this security vulnerability. The Elf assigned to fix this vulnerability kept pushing off the task, and this never got done. Luckily, some of those recovery keys can be used to save some systems.

Unfortunately, the only way to access the server is through an old web application. See if you can pull out those recovery keys to help McSkidy with her pursuit to save Christmas.

Check out HuskyHacks's walkthrough video for day 6 here

In this task, we will be covering the basics of a Local File Inclusion (LFI) vulnerability, including how to identify and test for LFI. We will also show the impact of an LFI vulnerability by exploiting it.

Let's begin by starting the machine attached to this task.

What is a Local File Inclusion (LFI) vulnerability?

An LFI vulnerability is found in various web applications. As an example, in PHP, the following functions can introduce this kind of vulnerability:

- include

- require

- include_once

- require_once

LFI is a web application vulnerability that allows the attacker to include and read local files on the server. These files could contain sensitive data such as cryptographic keys, databases that contain passwords, and other private data. An LFI vulnerability usually stems from a developer's lack of security awareness. In some cases, developers need to include the content of other local files within a specific page. If a developer includes files based on user input without proper validation, an LFI vulnerability will exist: user input should never be trusted, and every input from users should be filtered and sanitized. The root cause of these vulnerabilities is therefore that the user controls an input that is neither sanitized nor validated.

What is the risk of LFI?

Once you find an LFI vulnerability, it is possible to read any sensitive file that the web application has permission to read. Thus, one of the most significant risks is leaking sensitive data that a regular user can access. Also, in some cases, an LFI vulnerability could be chained to perform Remote Code Execution (RCE) on the server. If we can inject or write to a file on the system, we can take advantage of LFI to get RCE. In this task, we prepared a web application with an LFI vulnerability and a possible way to get RCE. We'll be looking at this web application later.

Identifying and testing for LFI

Usually, attackers are interested in HTTP parameters to manipulate the input and inject attack payloads to see how the web application behaves. In general, if you are looking for an entry point to test web application attack types, then it is important to use the web app and check its functionalities. An entry point could be HTTP GET or POST parameters that pass an argument or data to the web application to perform a specific operation.

Parameters are query parameter strings attached to the URL that could be used to retrieve data or perform actions based on user input. The following graph explains and breaks down the essential parts of the URL.

For example, parameters are used with Google searching, where GET requests pass user input into the search engine. https://www.google.com/search?q=TryHackMe. If you are not familiar with the topic, you can view the How The Web Works module to understand the concept.
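As a small illustration, the Google URL above can be split into its parts with Python's standard library. This is only a sketch of how a URL breaks down into scheme, host, path and query parameters.

```python
from urllib.parse import parse_qs, urlsplit

parts = urlsplit("https://www.google.com/search?q=TryHackMe")

scheme = parts.scheme          # protocol used for the request
host = parts.netloc            # server that receives the request
path = parts.path              # resource being requested
params = parse_qs(parts.query) # user-controlled query parameters
```

The `params` dictionary is the part an attacker is most interested in, because each key is a potential entry point for injected input.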

Once you find an entry point, you need to understand how this data could be processed within the application. After this point, you can start testing for certain vulnerability types manually or with automated tools. The following is an example of PHP code that is vulnerable to LFI.

<?PHP

include($_GET["file"]);

?>

The PHP code above uses a GET request via the URL parameter file to include the file on the page. The request can be made by sending the following HTTP request: http://example.thm.labs/index.php?file=welcome.txt to load the content of the welcome.txt file that exists in the same directory.
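For contrast, here is a minimal sketch of the kind of validation the vulnerable snippet lacks, written in Python rather than PHP. The function names are hypothetical, and restricting includes to a single base directory is one possible approach, not the only one.

```python
import os
import tempfile


def resolve_include(base_dir: str, requested: str) -> str:
    """Resolve a user-supplied file name and refuse anything outside base_dir."""
    base = os.path.realpath(base_dir)
    # realpath collapses ".." segments and follows symlinks before the check.
    target = os.path.realpath(os.path.join(base, requested))
    if os.path.commonpath([base, target]) != base:
        raise ValueError(f"refusing to include a file outside {base}: {requested!r}")
    return target


def is_allowed(base_dir: str, requested: str) -> bool:
    """Convenience wrapper that reports whether a request would be served."""
    try:
        resolve_include(base_dir, requested)
        return True
    except ValueError:
        return False


if __name__ == "__main__":
    pages = tempfile.mkdtemp()  # stand-in for the directory holding welcome.txt
    print(is_allowed(pages, "welcome.txt"))        # stays inside the directory
    print(is_allowed(pages, "../../etc/passwd"))   # escapes it, so it is refused
```

The check happens on the resolved path rather than on the raw string, so it does not depend on spotting particular patterns in the input.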

In addition, other entry points can be used depending on the web application, such as the User-Agent, cookies, session, and other HTTP headers.

Now that we found our entry point, let's start testing for reading local files related to the operating system. The following are some Linux system files that have sensitive information.

/etc/issue

/etc/passwd

/etc/shadow

/etc/group

/etc/hosts

/etc/motd

/etc/mysql/my.cnf

/proc/[0-9]*/fd/[0-9]* (first number is the PID, second is the file descriptor)

/proc/self/environ

/proc/version

/proc/cmdline

Let's start with basic testing of LFI. Once we identify the entry point or the HTTP parameter, we can begin testing and include OS files to see how the web application reacts. As a test case, we can always try /etc/passwd on a Linux OS since it is readable by all users by default. We can also try to include it using different techniques such as

- A direct file inclusion, which starts with /etc/passwd

- Using .. to get out of the current directory; the number of .. varies depending on the web app directory.

- Bypassing filters using ....//.

- URL encoding techniques (such as double encoding)

You can review the topic in the file inclusion room for more information on these techniques.

http://example.thm.labs/page.php?file=/etc/passwd

http://example.thm.labs/page.php?file=../../../../../../etc/passwd

http://example.thm.labs/page.php?file=../../../../../../etc/passwd%00

http://example.thm.labs/page.php?file=....//....//....//....//etc/passwd

http://example.thm.labs/page.php?file=%252e%252e%252fetc%252fpasswd

Once you have successfully viewed the content of the /etc/passwd file, you can test for other files.
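Two of the bypass techniques listed above can be reproduced in a few lines of Python. The `naive_filter` function is a hypothetical example of a weak defence (removing "../" in a single pass); real applications may filter differently.

```python
from urllib.parse import unquote


def naive_filter(value: str) -> str:
    """A weak, hypothetical filter that strips '../' in one left-to-right pass."""
    return value.replace("../", "")


# Nested traversal: removing the inner "../" leaves a fresh "../" behind.
nested = "....//....//....//....//etc/passwd"
after_filter = naive_filter(nested)

# Double encoding: "%25" decodes to "%", so one decode still looks harmless.
double_encoded = "%252e%252e%252fetc%252fpasswd"
once = unquote(double_encoded)   # what a filter sees after a single decode
twice = unquote(once)            # what the application uses after a second decode
```

In both cases the filter inspects a value that looks safe, while the value that finally reaches the file include contains a working traversal sequence.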

Now, start the attached machine by using the green Start Machine button in this task, and apply what we discussed so far and answer the questions below. In order to access the website, you can either deploy the AttackBox or visit the following website link: https://LAB_WEB_URL.p.thmlabs.com.

Exploiting LFI

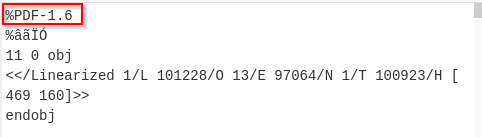

Exploiting an LFI is sometimes limited and depends on the web application server configuration. Besides reading sensitive data, we can often obtain remote code execution. If we are dealing with a PHP web application, then we can use a PHP-supported wrapper. For more information, visit the PHP manual page. PHP wrappers provide various input/output streams that PHP can read data from, which lets it read data through a variety of channels.

PHP Filter

The PHP filter wrapper is used in LFI to read the actual PHP page content. In typical cases, it is not possible to read a PHP file's content via LFI because PHP files get executed and never show the existing code. However, we can use the PHP filter to display the content of PHP files in other encoding formats such as base64 or ROT13.

Let's try first reading the /etc/passwd file using the PHP filter wrapper.

http://example.thm.labs/page.php?file=php://filter/resource=/etc/passwd

If we now try to read the index.php file in the same way, we get errors because the web server tries to execute the PHP code. To avoid this, we can use a PHP filter while base64 or ROT13 encoding the output as follows:

http://example.thm.labs/page.php?file=php://filter/read=string.rot13/resource=/etc/passwd

http://example.thm.labs/page.php?file=php://filter/convert.base64-encode/resource=/etc/passwd

We will try to use base64 for our scenario. As a result, we will get base64 encoded output as follows:

cm9vdDp4OjA6MDpyb290Oi9yb290Oi9iaW4vYmFzaApkYWVtb246eDox******Deleted

To read this text as plain text, you can use a Linux terminal or use one of the websites that decodes online, for example, www.base64decode.org.
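Decoding can also be done in a few lines of Python. The sketch below decodes only the visible prefix of the truncated output above (the rest is elided in the original), and shows the equivalent round trip for ROT13.

```python
import base64
import codecs

# Only the part of the output that is visible before the "******Deleted" marker.
visible_prefix = "cm9vdDp4OjA6MDpyb290Oi9yb290Oi9iaW4vYmFzaApkYWVtb246eDox"
decoded = base64.b64decode(visible_prefix).decode("utf-8")

# ROT13 is its own inverse: applying it twice restores the original text.
rot13_sample = codecs.encode("root:x:0:0", "rot13")
restored = codecs.decode(rot13_sample, "rot13")
```

The decoded prefix is the start of a passwd file, which confirms that the filter returned the file content rather than executing it.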

Now, try to retrieve the index.php content, and answer question #3 below.

PHP DATA

The PHP data wrapper is used to include raw plain text or base64 encoded data. It is used to include images on the current page, and it can also be used in an LFI exploit.

Let's try to base64 encode "AoC3 is fun!" text to include it into the page using wrapper data:

user@machine$ echo "AoC3 is fun!" | base64

QW9DMyBpcyBmdW4hCg==

Also, we could decode a base64 as follows:

user@machine$ echo "QW9DMyBpcyBmdW4hCg==" | base64 --decode

AoC3 is fun!

Now we can include our base64 data into the vulnerable page as follows,

http://example.thm.labs/page.php?file=data://text/plain;base64,QW9DMyBpcyBmdW4hCg==

As a result, the page will show our lovely message, which is AoC3 is fun!. Using this technique, we can include PHP code into a page by encoding the required PHP code and including it via the PHP data wrapper.
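The same data URI can be built programmatically. This is a minimal Python sketch; `build_data_uri` is a hypothetical helper name. Note the trailing newline in the payload: echo appends one, which is why the encoded string above ends in Cg==.

```python
import base64


def build_data_uri(payload: bytes) -> str:
    """Wrap raw bytes in a base64 data:// URI suitable for the file parameter."""
    encoded = base64.b64encode(payload).decode("ascii")
    return f"data://text/plain;base64,{encoded}"


# echo adds "\n" to its output, so it is included here to match the example.
uri = build_data_uri(b"AoC3 is fun!\n")
```

Base64 output can contain characters such as + and /, so for other payloads it may need URL-encoding before being placed in a query string.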

Other PHP wrappers could be used in the LFI vulnerability. You can do some research to check them out!

As we mentioned before, we can gain remote command execution if we have the ability to write into a file or chain it with other vulnerability types. In this task, we will be using the vulnerable web application that we provided to perform an RCE via LFI.

LFI to RCE via Log files

This technique is also called a log poisoning attack. It is used to gain remote command execution on the webserver. The attacker needs to inject a malicious payload into the log files of services such as Apache, SSH, etc. Then, the LFI vulnerability is used to request the page that includes the malicious payload. Exploiting this kind of attack depends on various factors, including the design of the web application and server configurations. Thus, it requires enumeration, analysis, and an understanding of how the web application works. For example, a user can include a malicious payload into an Apache log file via User-Agent or other HTTP headers. In SSH, the user can inject a malicious payload in the username section.

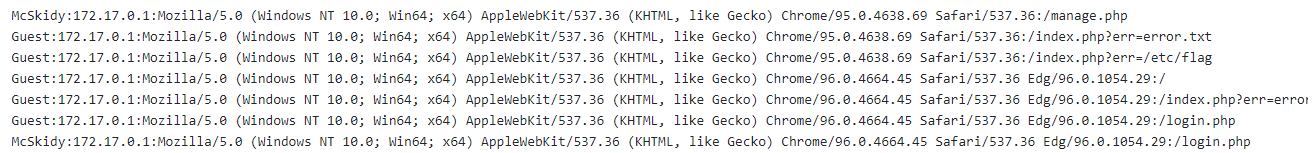

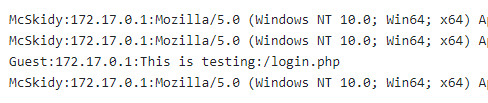

In this task, we provided a vulnerable web application that logs users' requests into a log file to which the webserver user has access. Once we log into the web application, we can visit the log page at https://LAB_WEB_URL.p.thmlabs.com/logs.php.

We can see that the log page stores four different fields: username, IP address, User-Agent, and the visited page. The User-Agent is an HTTP header that includes the user's browser information to let servers identify the type of operating system, vendor, and version. The User-Agent is one of the HTTP headers that the user can control. Therefore, in order to get RCE, you need to include PHP code in the User-Agent and then request the log file through the LFI so that the server executes the code. Now, let's test whether we can write a User-Agent value into the web application log file, and see if our request is recorded!

user@machine$ curl -A "This is testing" http://LAB_WEB_URL.p.thmlabs.com/login.php

Once we have sent the HTTP request using curl, we can log in as a registered user and check the log page to see whether the User-Agent that we sent was recorded.

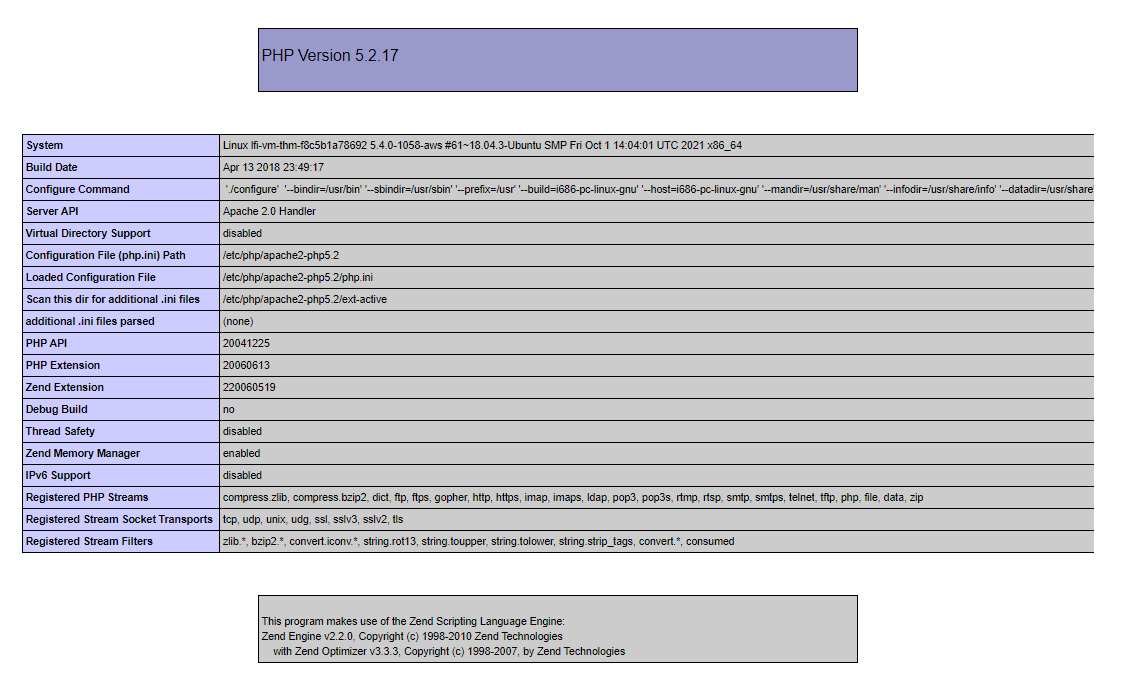

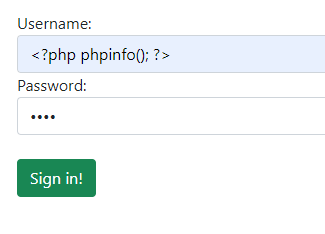

user@machine$ curl -A "<?php phpinfo();?>" http://LAB_WEB_URL.p.thmlabs.com/login.php

Now, using the LFI, load the log file to get the PHP code executed. Note that it is important to visit the log file via the LFI. Once you call the log file, you will see the PHP information page.
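For readers who prefer scripting to curl, the same two requests can be prepared with Python's standard library. This sketch only builds the request objects and does not send anything; passing one to urllib.request.urlopen would actually send it to the lab host.

```python
from urllib.request import Request

target = "http://LAB_WEB_URL.p.thmlabs.com/login.php"

# Equivalent of: curl -A "This is testing" <target>
probe = Request(target, headers={"User-Agent": "This is testing"})

# Equivalent of: curl -A "<?php phpinfo();?>" <target>
poison = Request(target, headers={"User-Agent": "<?php phpinfo();?>"})

# urllib normalises header names, so the stored key is "User-agent".
probe_agent = probe.get_header("User-agent")
poison_agent = poison.get_header("User-agent")
```

The header value is sent exactly as given, which is why a PHP snippet placed there ends up verbatim in the application's log file.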

Now it is practice time. Apply what we discussed to gain RCE: include PHP code in the User-Agent, then use the LFI vulnerability to call the log file and get your PHP code executed. Then answer the question below.

LFI to RCE via PHP Sessions

The LFI to RCE via PHP sessions follows the same concept of the log poisoning technique. PHP sessions are files within the operating system that store temporary information. After the user logs out of the web application, the PHP session information will be deleted.

This technique requires enumeration: we first read the PHP configuration file to learn where the PHP session files are stored. Then, we inject PHP code into the session and finally call the file via LFI. PHP stores session data in files within the system in different locations based on the configuration. The following are some of the common locations that PHP uses:

c:\Windows\Temp

/tmp/

/var/lib/php5

/var/lib/php/session

Once the attacker finds where PHP stores the session file and can control the value of their session, the attacker can chain it with an LFI to gain remote command execution.

If we enumerate and read the PHP configuration of the vulnerable web application we provided, we can see that it stores the PHP sessions in the /tmp directory. It also stores the value of the username in the session even if you are not logged in, since the developer needs this value for the logs function. In the following figure, we inject PHP code into the username field, which is then stored in the PHP session file.

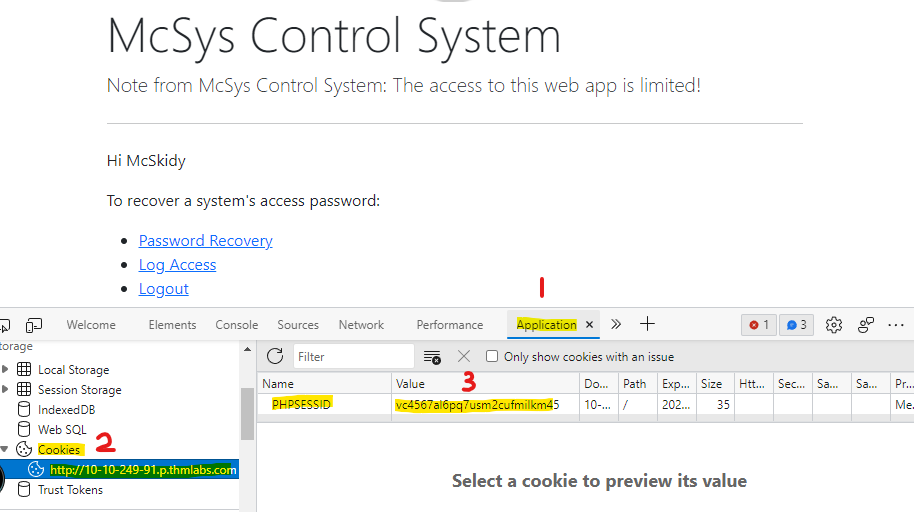

To find the PHP session file name, note that PHP by default uses the naming scheme sess_<SESSION_ID>, where we can find the SESSION_ID using the browser by inspecting the cookies sent from the server.

To find the session ID in the browser, you can open the developer tools (SHIFT+CTRL+I), then the Application tab. From the left menu, select Cookies and select the target website. There you will find a PHPSESSID cookie and its value. In my case, the value is vc4567al6pq7usm2cufmilkm45. Therefore, the file will be named sess_vc4567al6pq7usm2cufmilkm45. Finally, we know it is stored in /tmp. Now we can use the LFI to call the session file.

https://LAB_WEB_URL.p.thmlabs.com/login.php?err=/tmp/sess_vc4567al6pq7usm2cufmilkm45

As a result, the PHP code that we injected into the username field will be included in the page. Now it is your turn: apply what we discussed to gain RCE on the webserver using the LFI to RCE via PHP sessions technique.
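The steps for locating the session file can be sketched in Python. The helper names are hypothetical; the session directory, cookie value, and err parameter are the ones used in the example above.

```python
import posixpath
from urllib.parse import urlencode


def session_file(session_dir: str, session_id: str) -> str:
    """Build the session file path from PHP's default sess_<SESSION_ID> scheme."""
    return posixpath.join(session_dir, f"sess_{session_id}")


def lfi_url(base_url: str, param: str, path: str) -> str:
    """Place a file path into the vulnerable parameter, keeping slashes readable."""
    return f"{base_url}?{urlencode({param: path}, safe='/')}"


path = session_file("/tmp", "vc4567al6pq7usm2cufmilkm45")
url = lfi_url("https://LAB_WEB_URL.p.thmlabs.com/login.php", "err", path)
```

Your own PHPSESSID will differ, so the cookie value has to be read from the browser each time a new session starts.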

Additional Resources

If you are interested in learning more about file inclusion vulnerabilities, check out the file inclusion room on TryHackMe.

Use the entry point to perform LFI to read the /etc/flag file. What is the flag?

Use the PHP filter technique to read the source code of the index.php. What is the $flag variable's value?

McSkidy forgot her login credentials. Can you help her to log in in order to recover one of the server's passwords?

Now that you read the index.php, there is a login credential PHP file's path. Use the PHP filter technique to read its content. What are the username and password?

Use the credentials to log in to the web application. Help McSkidy to recover the server's password. What is the password of the flag.thm.aoc server?

The web application logs all users' requests, and only authorized users can read the log file. Use the LFI to gain RCE via the log file page. What is the hostname of the webserver? The log file location is at ./includes/logs/app_access.log.

Bonus: The current PHP configuration stores the PHP session files in /tmp. Use the LFI to call the PHP session file to get your PHP code executed.

The development team that handles gift requests from children migrated over to a new technology stack. In doing so, they left their application vulnerable, and Grinch Enterprises now controls access to the system. Fortunately, Grinch Enterprises forgot to patch the system, so you can use the same vulnerability to retrieve the gift requests for the students.

Check out Tib3rius' walkthrough video for day 7 here

Please note: the video walkthrough uses the in-browser access to complete the room. The instructions in the task are slightly different and make use of the AttackBox/OpenVPN to ssh into the target machine.

Learning Objectives

- What is NoSQL?

- Understanding NoSQL database

- Understand why NoSQL injection happens

- Understand what NoSQL injection is

- Using NoSQL Injection to bypass a login form

What is NoSQL

NoSQL, short for non-SQL or Not only SQL, refers to non-relational databases. A NoSQL database is a data-storing and data-retrieving system. NoSQL databases are commonly used nowadays for big data and IoT devices due to their powerful features such as fast queries, ease of use for developers, easy scaling, and flexible data structures.

Today, we will cover the basic concepts of NoSQL databases and information gathering, enumeration, and exploiting NoSQL vulnerabilities.

Understanding NoSQL

Various types of NoSQL databases can be covered, including MongoDB, Couchbase, RavenDB, etc. However, in this task, we will focus on MongoDB database, a free and popular document-store NoSQL database.

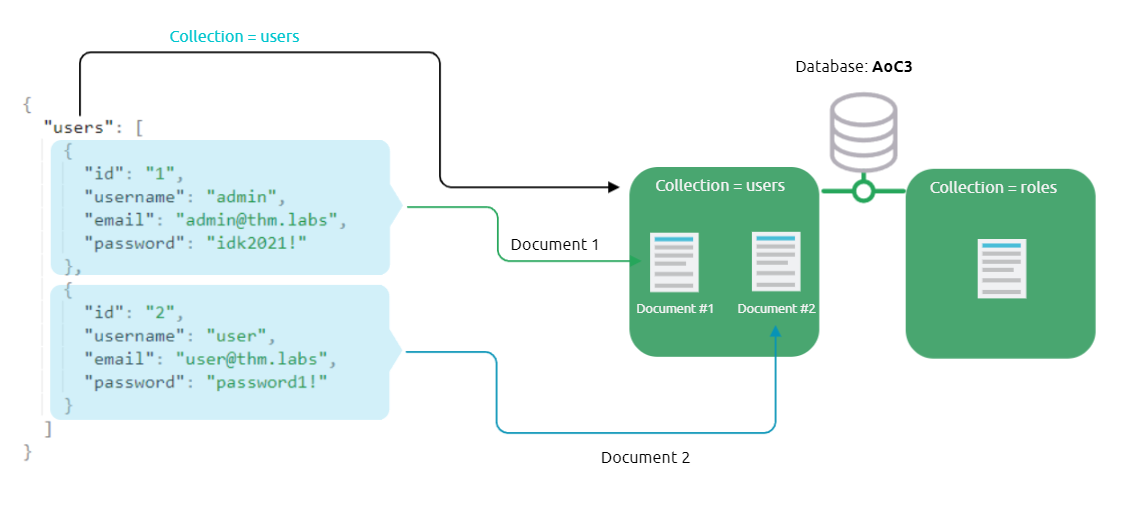

Similar to relational databases (such as MySQL and MSSQL), MongoDB consists of databases, tables, and fields, but under different names:

- Collections are similar to tables or views in MySQL and MSSQL.

- Documents are similar to rows or records in MySQL and MSSQL.

- Fields are similar to columns in MySQL and MSSQL.

The following graph shows a visual example of these terms as we have a database named AoC3 that has two collections: users, roles. The users collection has two documents (2 records). Documents in MongoDB are objects stored in a format called BSON, which supports JSON data types for document storing.

Also, it is useful to briefly look at and compare the query operators between MongoDB and MySQL:

- $and equivalent to AND in MySQL

- $or equivalent to OR in MySQL

- $eq equivalent to = in MySQL

Interacting with a MongoDB server

Note: To follow along with interacting with the MongoDB server, please deploy the attached machine and use the AttackBox or connect to the VPN in order to log into the SSH server. Use the following credentials: thm:tryhackme.

user@machine$ ssh thm@MACHINE_IP -p 2222

Before we dive into the details of NoSQL injection, it is important to understand how MongoDB works in a general sense. Let's start with connecting to MongoDB on our deployed target machine using the default port 27017. We will be creating a new database and collections as well as documents.

user@machine$ mongo

connecting to: mongodb://127.0.0.1:27017/?compressors=disabled&gssapiServiceName=mongodb

Implicit session: session { "id" : UUID("1273eab2-4dae-42a8-8f67-a133b870872b") }

MongoDB server version: 4.4.10

Now let's use the show command to list all the databases that we have in MongoDB on our target machine:

> show databases

admin 0.000GB

config 0.000GB

local 0.000GB

logindb 0.000GB

thm-test 0.000GB

thmdb 0.000GB

Next, if we want to connect to a database, we can do this by using the use command. However, we will also show how to create a new database with collections and data. To create a new database, we also use the same use command to create and connect to it. Therefore, the use command is used to connect to a database if it exists or create a new one if it doesn't exist. Once the database is created, we can create two new collections, named users and roles using the db.createCollection() function, and then show all available collections within the database.

> use AoC3

switched to db AoC3

> db.createCollection("users")

{ "ok" : 1 }

> db.createCollection("roles")

{ "ok" : 1 }

> db.getCollectionNames();

[ "roles", "users" ]

Next, we create a document within the users collection and insert data into it.

> db.users.insert({id:"1", username: "admin", email: "admin@thm.labs", password: "idk2021!"})

WriteResult({ "nInserted" : 1 })

> db.users.insert({id:"2", username: "user", email: "user@thm.labs", password: "password1!"})

WriteResult({ "nInserted" : 1 })

>

> db.users.find()

{ "_id" : ObjectId("6183dc871ebe3f0c4b779a31"), "id" : "1", "username" : "admin", "email" : "admin@thm.labs", "password" : "idk2021!" }

{ "_id" : ObjectId("6183dc911ebe3f0c4b779a32"), "id" : "2", "username" : "user", "email" : "user@thm.labs", "password" : "password1!" }

We successfully created two documents in the users collection and then showed the available documents within the collection using db.users.find(). Note from the above output that MongoDB automatically creates a unique ID called _id for each document within the collection. Let's also try to update a document and then delete it using MongoDB commands. We will use the db.<collection>.update function to update the document with id=2, setting its username to tryhackme, and finally show all documents to make sure our document was updated.

> db.users.update({id:"2"}, {$set: {username: "tryhackme"}});

WriteResult({ "nMatched" : 1, "nUpserted" : 0, "nModified" : 1 })

> db.users.find()

{ "_id" : ObjectId("6183dc871ebe3f0c4b779a31"), "id" : "1", "username" : "admin", "email" : "admin@thm.labs", "password" : "idk2021!" }

{ "_id" : ObjectId("6183dc911ebe3f0c4b779a32"), "id" : "2", "username" : "tryhackme", "email" : "user@thm.labs", "password" : "password1!" }

Finally, let's remove the document using db.users.remove() and then drop the collection using db.users.drop(), as follows:

> db.users.remove({'id':'2'})

WriteResult({ "nRemoved" : 1 })

> db.users.find()

{ "_id" : ObjectId("6183dc871ebe3f0c4b779a31"), "id" : "1", "username" : "admin", "email" : "admin@thm.labs", "password" : "idk2021!" }

> db.users.drop()

true

Now that we know how to interact with the MongoDB server, look around by using the MongoDB commands we've learned, and find the flag to answer Question 1!

What is NoSQL Injection?

Now that we have the basic knowledge of dealing with MongoDB commands to create databases and collections as well as insert, update, and delete documents, we will discuss NoSQL injection and the risks of having such a vulnerability in the application. NoSQL injection is a web security vulnerability that allows the attacker to have control over the database. A NoSQL injection happens when queries are built from untrusted and unfiltered web application input, which can leak information to unauthorized parties. In addition, the attacker can use NoSQL injection to perform various operations such as modifying data, escalating privileges, DoS attacks, and others.

Bypassing login pages!

The logic of login pages is similar in most databases: first, connect to the database and then look for a certain username and password; if they exist in the collection (in the database), then we have a valid entry. The web application behind our login page uses the db.users.find({query}) or db.users.findOne(query) functions, where the query is JSON data that's sent via the application: {"username": "admin", "password":"adminpass"}. Note that when we provide the correct credentials, a document is returned, while null is returned when we provide the wrong credentials and nothing matches!

> db.users.findOne({username: "admin", password: "adminpass"})

{

"_id" : ObjectId("6183ef6292dea43d75f0c820"),

"id" : "1",

"username" : "admin",

"email" : "admin@thm.labs",

"password" : "adminpass"

}

> db.users.findOne({username: "admin", password: "adminpasss"})

null

Before exploiting the NoSQL injection, there are MongoDB operators that we need to be familiar with that are heavily used in the injections, which are:

$eq - matches records that are equal to a certain value.

$ne - matches records that are not equal to a certain value.

$gt - matches records that are greater than a certain value.

$where - matches records based on a JavaScript condition.

$exists - matches records that have a certain field.

$regex - matches records that satisfy a certain regular expression.
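To make the operator semantics concrete, here is a minimal Python sketch of a toy matcher. It is not how MongoDB is implemented: the function names (field_matches, matches, find) are hypothetical, the sample documents only imitate the users collection from this task, and $where is left out because it would need a JavaScript engine.

```python
import re

def field_matches(value, present, cond):
    """Check one field against a plain value or an operator object."""
    if not isinstance(cond, dict):
        return present and value == cond
    for op, arg in cond.items():
        if op == "$eq":
            ok = present and value == arg
        elif op == "$ne":
            # Like MongoDB, a missing field counts as "not equal".
            ok = (not present) or value != arg
        elif op == "$gt":
            # Toy assumption: value and arg have comparable types.
            ok = present and value > arg
        elif op == "$exists":
            ok = present == bool(arg)
        elif op == "$regex":
            ok = present and isinstance(value, str) and re.search(arg, value) is not None
        else:
            raise ValueError(f"unsupported operator: {op}")
        if not ok:
            return False
    return True

def matches(doc, query):
    """A document matches when every field condition in the query holds."""
    return all(field_matches(doc.get(k), k in doc, cond) for k, cond in query.items())

def find(collection, query):
    """Toy stand-in for db.collection.find(query)."""
    return [doc for doc in collection if matches(doc, query)]

# Sample documents in the style of this task's users collection.
users = [
    {"id": "1", "username": "admin", "email": "admin@thm.labs", "password": "adminpass"},
    {"id": "2", "username": "user", "email": "user@thm.labs", "password": "password1!"},
]

print(find(users, {"username": {"$ne": "admin"}}))
print(find(users, {"email": {"$regex": "^admin@"}}))
```

The point to take away is that an operator object replaces a plain value in the query, so a field that normally means "equals this string" can be turned into a much looser condition.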

Visit the MongoDB website for more information about the MongoDB operators. Next, we will be exploiting the logic of the login query by injecting a JSON object which includes one of the NoSQL operators, which is $ne.

> db.users.findOne({username: "admin", password: {"$ne":"xyz"}})

{

"_id" : ObjectId("6183ef6292dea43d75f0c820"),

"id" : "1",

"username" : "admin",

"email" : "admin@thm.labs",

"password" : "adminpass"

}

We injected the JSON object {"$ne": "xyz"} into the password field, which changed the logic of the query as follows:

- We are telling MongoDB to find a document (user) whose username is equal to admin and whose password is not equal to xyz. This condition is TRUE because the admin's password is not xyz.

As a result, we have successfully retrieved the document from MongoDB since our statement's logic is true. By applying this concept against a login page of a web application, we will be able to bypass the login page.

Now let's say we wanted to log in to a system as another user who is not admin. In this case, we can also inject into the username field, as follows:

> db.users.findOne({username:{"$ne":"admin"},password:{"$ne":"xyz"}})

{

"_id" : ObjectId("6183ef5b92dea43d75f0c81f"),

"id" : "2",

"username" : "user",

"email" : "user@thm.labs",

"password" : "password1!"

}

If this were a login page, we would be logged in as a non-admin account, which here is user. We injected two JSON objects, one into the username field and one into the password field: we are telling MongoDB to find a document whose username is not equal to admin and whose password is not equal to xyz, and the user document satisfies both conditions.
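The bypass can be sketched in Python with a toy stand-in for db.users.findOne. Everything here is an assumption for illustration: find_one, login_vulnerable and login_checked are hypothetical names, the matcher supports only equality and $ne, and a real application would talk to MongoDB through a driver. The checked variant shows one simple way to filter the input, by accepting only plain strings.

```python
USERS = [
    {"id": "1", "username": "admin", "email": "admin@thm.labs", "password": "adminpass"},
    {"id": "2", "username": "user", "email": "user@thm.labs", "password": "password1!"},
]

def find_one(query):
    """Toy stand-in for db.users.findOne: equality and {"$ne": value} only."""
    for doc in USERS:
        ok = True
        for field, cond in query.items():
            if isinstance(cond, dict) and set(cond) == {"$ne"}:
                ok = doc.get(field) != cond["$ne"]
            else:
                ok = doc.get(field) == cond
            if not ok:
                break
        if ok:
            return doc
    return None

def login_vulnerable(username, password):
    # Whatever the client sent (string or object) goes straight into the query.
    return find_one({"username": username, "password": password})

def login_checked(username, password):
    # Reject anything that is not a plain string before building the query.
    if not isinstance(username, str) or not isinstance(password, str):
        return None
    return find_one({"username": username, "password": password})

print(login_vulnerable("admin", "adminpasss"))             # wrong password: None
print(login_vulnerable("admin", {"$ne": "xyz"}))           # admin document returned
print(login_vulnerable({"$ne": "admin"}, {"$ne": "xyz"}))  # user document returned
print(login_checked("admin", {"$ne": "xyz"}))              # rejected: None
```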

Exploiting NoSQL injection

To exploit NoSQL injection within the web application, first, you need to find an entry point that doesn't sanitize the user's input. Next, you need to understand how the web application passes the request to the database! How queries are passed varies between applications. Sometimes, the web app accepts the user's input via GET or POST parameters, and sometimes web applications accept a JSON object, as is the case with APIs.

Injecting a NoSQL query takes a different form for GET or POST requests than for JSON objects, but the concept is the same. Let's discuss how to inject into regular GET or POST requests. To interact with MongoDB via GET or POST, we use array (bracket) syntax on a parameter to inject a MongoDB operator, so that the parameter becomes a JSON object that fits the Key: Value structure of the query. The following is an example of how to inject via URL:

http://example.thm.labs/search?username=admin&role[$ne]=user

Note that we inject the MongoDB operator [$ne] (not equal) in the role parameter. We can also use the same concept to pass the MongoDB operators in the POST requests.
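Why does role[$ne]=user matter? Many web frameworks use query-string parsers that turn bracket notation into nested objects, so the backend receives an operator object instead of a string. The Python sketch below imitates that behaviour under stated assumptions: parse_bracket_query is a hypothetical helper, it handles only one level of brackets, and the playground application's actual parser is not shown in this task.

```python
import re
from urllib.parse import parse_qsl, urlsplit

def parse_bracket_query(url):
    """Turn key[sub]=value pairs into nested dicts, roughly as bracket-aware parsers do."""
    result = {}
    for key, value in parse_qsl(urlsplit(url).query):
        m = re.fullmatch(r"([^\[\]]+)\[([^\[\]]+)\]", key)
        if m:
            field, operator = m.groups()
            nested = result.get(field)
            if not isinstance(nested, dict):
                nested = result[field] = {}
            nested[operator] = value
        else:
            result[key] = value
    return result

print(parse_bracket_query("http://example.thm.labs/search?username=admin&role[$ne]=user"))
# {'username': 'admin', 'role': {'$ne': 'user'}}
```

If the application then passes this dictionary to the database as the query, the role condition is no longer "equals user" but "not equal to user".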

We built a playground web application to search for the user(s) with a specific role! To perform NoSQL injection, first, you need to bypass the login page, as we discussed in the previous section.

Let's see the normal case, where we search for the username ben with the user role.

http://example.thm.labs/search?username=ben&role=user

As a result, the web application returns the expected result from MongoDB. Now let's inject a MongoDB operator to show unexpected results! We will try to list all usernames that have a user role!

http://example.thm.labs/search?username[$ne]=ben&role=user

As a result, the web application will return many records from MongoDB that satisfy the query. It is practice time: make sure you have deployed the attached machine and the AttackBox (to use Burp), then try to list all usernames that have guest roles. Find the flag and answer question #2 below.

You can access and visit the website via the following link: http://LAB_WEB_URL.p.thmlabs.com or http://MACHINE_IP in case you're using the AttackBox.

Additional Resources

If you are interested in learning more about injection vulnerabilities, check out the SQL injection and Command Injection rooms on TryHackMe.

We discussed how to bypass login pages as an admin. Can you log into the application that Grinch Enterprise controls as admin and retrieve the flag?

Use the knowledge given in AoC3 day 4 to set up and run the Burp Suite proxy to intercept the HTTP request for the login page. Then modify the POST parameter.

Once you are logged in, use the gift search page to list all usernames that have guest roles. What is the flag?

Use the gift search page to perform NoSQL injection and retrieve the mcskidy record. What is the details record?

Check out John Hammond's walkthrough video for day 8 here

McSkidy was notified of some terrible news! Santa's laptop, which he uses to prepare his bag of toys for Christmas, is missing! We believe a minion at the Grinch Enterprise stole it, but we need to find out for sure. It is up to us to determine what actor compromised the laptop and recover Santa's bag of toys!

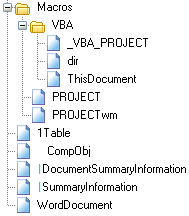

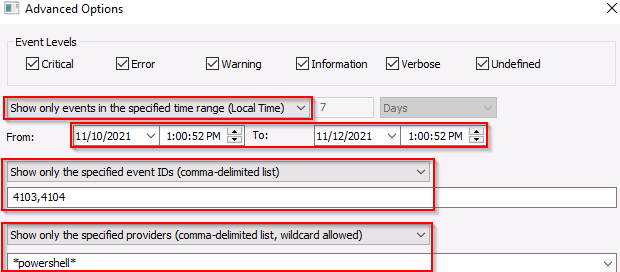

Unfortunately, The Best Festival Company had minimal monitoring tools on Santa's laptop (he is the boss, after all)! All we have to work with are some PowerShell Transcription Logs we were able to remotely recover just after it went missing. You can find the transcription logs within the SantasLaptopLogs folder on the Desktop of the attached Windows virtual machine.

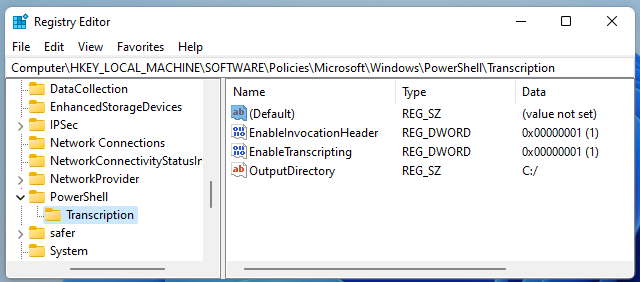

If you aren't familiar, PowerShell Transcription Logs capture the input and output of Windows PowerShell commands, allowing an analyst to review what happened when. Typically, PowerShell Transcription can be enabled by Group Policy, but another method to turn on this logging is by configuring the Windows Registry.

While you do not have to use these commands for this task, these will turn on PowerShell Transcription Logging for a local host if entered in an Administrator command prompt:

reg add HKEY_LOCAL_MACHINE\Software\Policies\Microsoft\Windows\PowerShell\Transcription /v EnableTranscripting /t REG_DWORD /d 0x1 /f

reg add HKEY_LOCAL_MACHINE\Software\Policies\Microsoft\Windows\PowerShell\Transcription /v OutputDirectory /t REG_SZ /d C:/ /f

reg add HKEY_LOCAL_MACHINE\Software\Policies\Microsoft\Windows\PowerShell\Transcription /v EnableInvocationHeader /t REG_DWORD /d 0x1 /f

The Windows Registry is a large database of operating system settings and configurations. It is organized by "hives", with each hive containing "keys" and their corresponding "values." PowerShell Transcription Logging can be enabled in this way "per-user" via the HKEY_CURRENT_USER registry hive, or across the entire host via the HKEY_LOCAL_MACHINE registry hive. Thankfully, Santa's laptop had this enabled machine-wide!

Note that for this task, you will interact with a Windows virtual machine to perform your analysis. For the sake of storyline, this is not Santa's laptop... rather, you have sample files that were recovered before the laptop was stolen.

Additional Resources

If you are interested in learning more about the Windows Fundamentals, check out the Windows Fundamentals module on TryHackMe.

Read the premise above, start the attached Windows analysis machine and find the transcription logs in the SantasLaptopLogs folder on the Desktop.

If you want to RDP into the machine, start the AttackBox and enter the following into a terminal: xfreerdp /u:Administrator /p:grinch123! /v:MACHINE_IP - The credentials for the machine are Administrator as the username, and grinch123! as the password.

Each transcription log is a simple plain text file that you can open in any editor of your choice. While the filenames are random, you can get an idea as to which log "comes first" by looking at the Date Modified or Date Created attributes, or the timestamps just before the file extension!
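If you prefer to order the logs programmatically, the timestamp in the filename is enough. The Python sketch below assumes a 14-digit YYYYMMDDHHMMSS timestamp right before the .txt extension; the filenames in the example are made up for illustration and are not the ones on the analysis machine.

```python
import re

def transcript_timestamp(filename):
    """Return the 14-digit timestamp before .txt, or "" if the name has none."""
    m = re.search(r"\.(\d{14})\.txt$", filename)
    return m.group(1) if m else ""

def sort_transcripts(filenames):
    """Sort oldest first; zero-padded timestamps sort correctly as strings."""
    return sorted(filenames, key=transcript_timestamp)

# Hypothetical filenames, for illustration only.
example = [
    "PowerShell_transcript.HOST.bbbbbbbb.20211128153734.txt",
    "PowerShell_transcript.HOST.aaaaaaaa.20211128153510.txt",
    "PowerShell_transcript.HOST.cccccccc.20211128153538.txt",
]

for name in sort_transcripts(example):
    print(name)
```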

Open the first transcription log. You can see the commands and output for everything that ran within PowerShell, like whoami and systeminfo!

What operating system is Santa's laptop running ("OS Name")?

Review each transcription log to get an idea for what activity was performed on the laptop just after it went missing. In the "second" transcription log, it seems as if the perpetrator created a backdoor user account!

What was the password set for the new "backdoor" account?

In one of the transcription logs, the bad actor interacts with the target under the new backdoor user account, and copies a unique file to the Desktop. Before it is copied to the Desktop, what is the full path of the original file?

The actor uses a Living Off The Land binary (LOLbin) to encode this file, and then verifies it succeeded by viewing the output file. What is the name of this LOLbin?

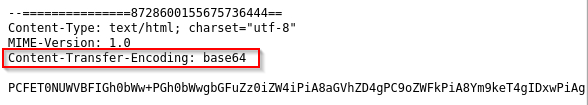

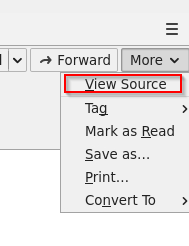

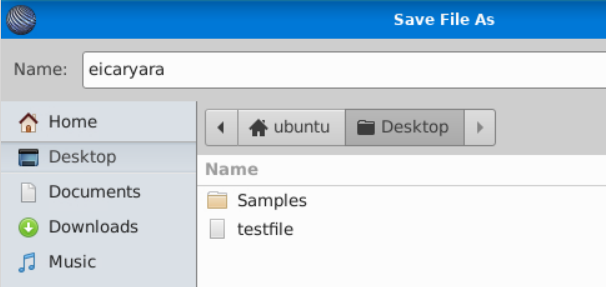

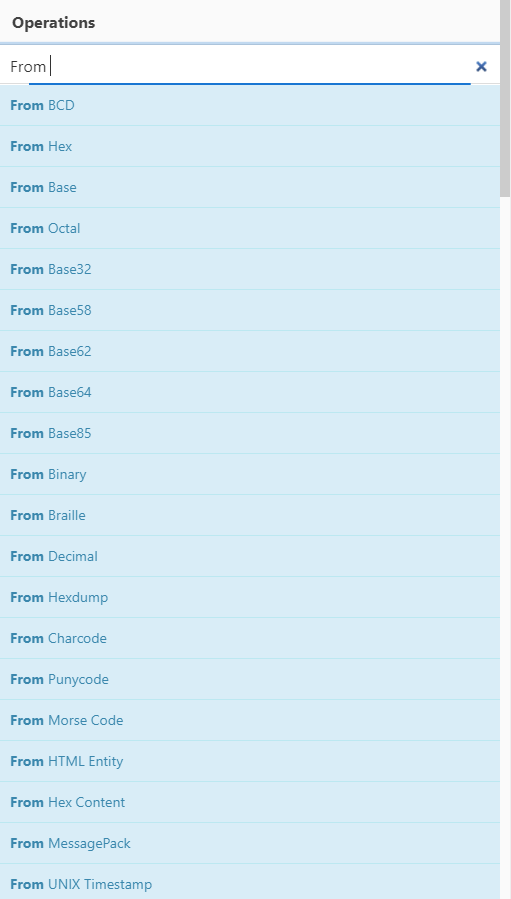

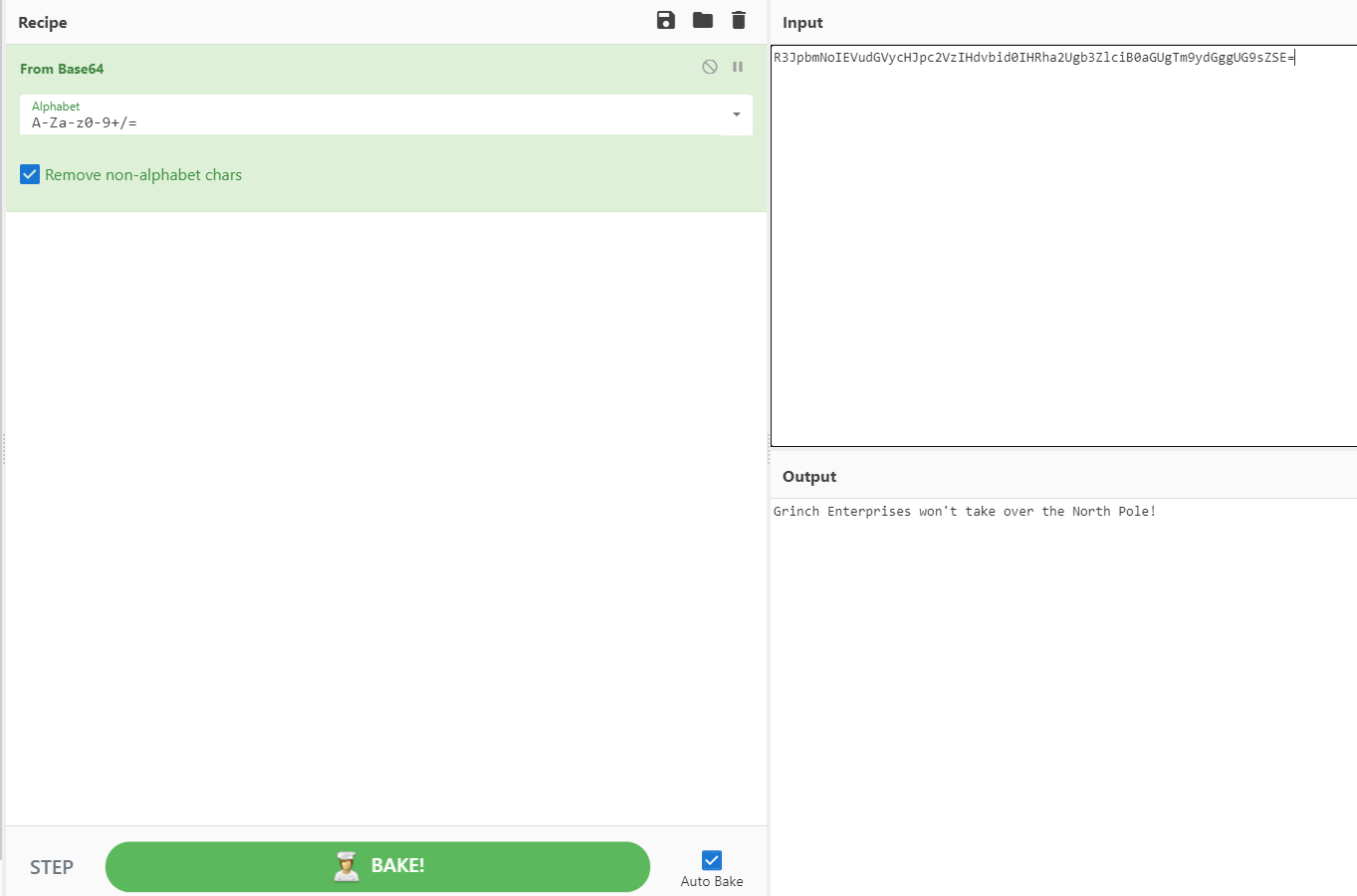

The UsrClass.dat file was encoded with Base64, which, thankfully, you can decode to recover the original UsrClass.dat file! Base64 decode the contents between the -----BEGIN CERTIFICATE----- and -----END CERTIFICATE----- markers within the transcription log with CyberChef, which you have a local copy of on the Desktop of your analysis machine.
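CyberChef is the intended tool for this step, but the same decoding can be sketched in a few lines of Python. This is only an illustration: decode_certificate_block is a hypothetical helper, it assumes the text contains exactly one marker pair, and the sample data is a short made-up byte string rather than the real hive.

```python
import base64

BEGIN = "-----BEGIN CERTIFICATE-----"
END = "-----END CERTIFICATE-----"

def decode_certificate_block(text):
    """Base64-decode whatever sits between the BEGIN and END markers."""
    start = text.index(BEGIN) + len(BEGIN)
    end = text.index(END, start)
    # Drop line breaks and spaces so the payload is one continuous string.
    payload = "".join(text[start:end].split())
    return base64.b64decode(payload)

# Made-up stand-in for a transcription log excerpt.
sample_log = (
    "PS C:\\> type encoded.txt\n"
    + BEGIN + "\n"
    + base64.encodebytes(b"regf-demo-bytes").decode()
    + END + "\n"
)

print(decode_certificate_block(sample_log))  # b'regf-demo-bytes'
```

With a real log, you would read the file's text, pass it to the function, and write the returned bytes to a file named UsrClass.dat.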

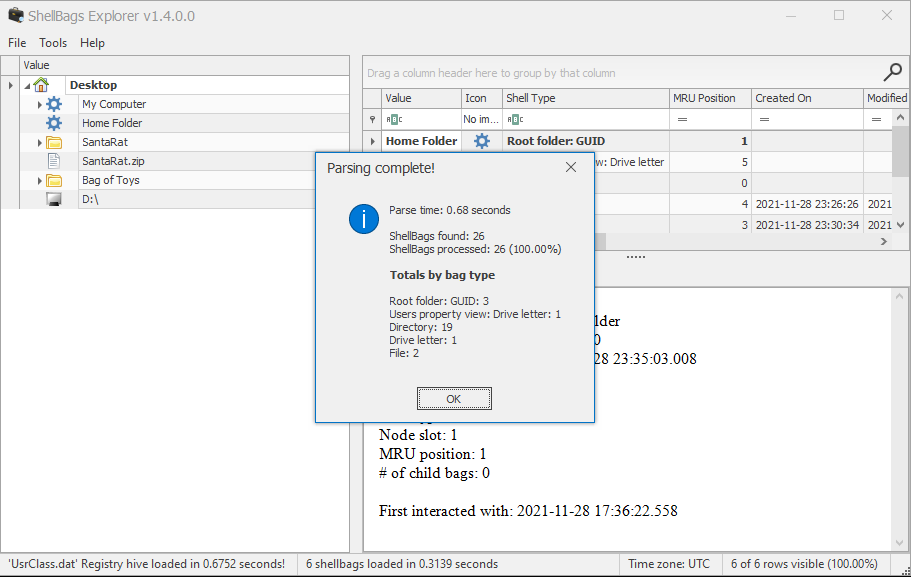

This file can be used to aid in our investigation. The UsrClass.dat file contains "Shellbags," or artifacts contained within the Windows registry that store user preferences while viewing folders within the Windows Explorer GUI. If you could carve out this information, you could get an idea as to what user activity was performed on the laptop before it was stolen or compromised! For more details and information on Shellbags, you are strongly encouraged to do some extra reading or research. :)

To extract the Shellbags information within this UsrClass.dat file, we will use the "Shellbags Explorer" graphical utility put together by Eric Zimmerman. This utility is readily available inside the ShellBagsExplorer folder on the Desktop of your Windows machine, with the application name ShellBagsExplorer.exe.

Read the above and open the ShellBagsExplorer.exe application found in the folder on your Desktop.

With ShellBagsExplorer.exe open, use the top-bar menu to select File -> Load offline hive and navigate to the location where you saved the decoded UsrClass.dat. Load in the UsrClass.dat file and begin to explore the Shellbags discovered!

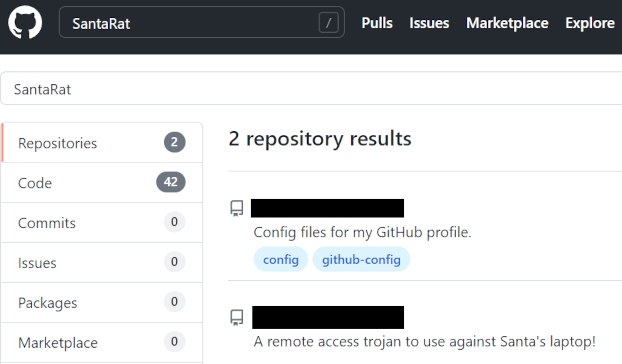

Under the Desktop folder, there seems to be a suspicious folder named "SantaRat". Could this be a remote access trojan that was used for further nefarious activity on Santa's laptop? Unfortunately, from Shellbags alone, we only have insight into folder names (sometimes file names, if we are lucky) and column data within Windows Explorer, but not file contents... how could we uncover more details?

Drill down into the folders and see if you can find anything that might indicate how we could better track down what this SantaRat really is. What specific folder name clues us in that this might be publicly accessible software hosted on a code-sharing platform?

Additionally, there is a unique folder named "Bag of Toys" on the Desktop! This must be where Santa prepares his collection of toys, and this is certainly sensitive data that the actor could have compromised. What is the name of the file found in this folder?

Track down this SantaRat software online. It may be just as simple as searching for the name of the software on the suggested website (Github).

Note that the TryHackMe Windows analysis machine does not have Internet access, so you will need to explore in your own web browser.

What is the name of the user that owns the SantaRat repository?

Explore the other repositories that this user owns. What is the name of the repository that seems especially pertinent to our investigation?

Read the information presented in this repository. It seems as if the actor has, in fact, compromised and tampered with Santa's bag of toys! You can review the activity in the transcription logs. It looks as if the actor installed a special utility to collect and eventually exfiltrate the bag of toys. What is the name of the executable that installed a unique utility the actor used to collect the bag of toys?

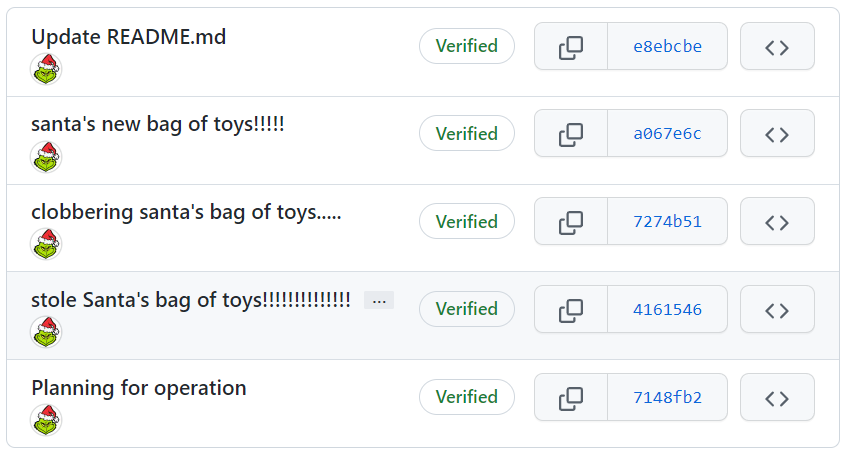

In the last transcription log, you can see the activity that this actor used to tamper with Santa's bag of toys! It looks as if they collected the original contents with a UHA archive. A UHA archive is similar to a ZIP or RAR archive, but faster and with better compression rates. It is very rare to see, but it looks like Grinch Enterprises is pulling out all the tricks!

You can see the actor compressed the original contents of the bag of toys with a password. Unfortunately, we are unable to see what the specific password was in these transcription logs! Perhaps we could find it elsewhere...

Following this, the actor looks to have removed everything from the bag of toys, and added in new things like coal, mold, worms, and more! What are the contents of these "malicious" files (coal, mold, and all the others)?

We know that the actor seemingly collected the original bag of toys. Maybe there was a slight OPSEC mistake, and we might be able to recover Santa's Bag of Toys! Review the actor's repository for its planned operations... maybe in the commit messages, we could find the original archive and the password!

What is the password to the original bag_of_toys.uha archive? (You do not need to perform any password-cracking or bruteforce attempts)

McSkidy was able to download and save a copy of the bag_of_toys.uha archive, and you have it accessible on the Desktop of the Windows analysis machine. After uncovering the password from the actor's GitHub repository, you have everything you need to restore Santa's original bag of toys!!

Double-click on the archive on the desktop to open a graphical UHARC extraction utility that has been prepared for you. Using the password you uncovered, extract the contents into a location of your choosing (you might make a "Bag of Toys" directory on the Desktop to save all the files into).